What’s the latest in AI tools? After months of new software making waves in the creative industry, Adobe has finally launched its own AI image generator. While still in beta, the debut of Adobe Firefly and its generative AI features marks a significant enhancement to a creative suite already used by millions. Rather than paying for and learning additional products, such as DALL-E or Midjourney, designers now have AI tools at their fingertips within Photoshop, Illustrator & Premiere.

Much like other AI tools on the market, Adobe’s product lets users type in a prompt and receive a generated image in return. One critical difference, however, is that Adobe is one of the few companies willing to discuss what data its models are trained on, which it says addresses the controversial copyright concerns surrounding AI imagery. According to Adobe, every asset fed into its models is either out of copyright, licensed for training, or part of the Adobe Stock library, which the company has the right to use. This gives Adobe’s product an additional leg up in the AI race, since copyright and trademark infringement has been a major source of controversy in the design community. Adobe’s VP of Generative AI & Sensei, Alexandru Costin, says, “We can generate high-quality content and not random brands’ and others’ IP because our model has never seen that brand content or trademark.”

So is it worth the hype? Bluetext’s designers took a tour of the beta program to test its capabilities and pressure-test some potential applications. Our main takeaways? Like any AI tool on the market, it has obvious advantages but also notable weaknesses, proving that AI technology is still a work in progress and not a full replacement for human abilities.

Let’s explore some of Bluetext’s experiments with Generative AI.

One of the most impressive feats of Adobe’s generative fill is the ability to enhance imagery with additional subjects. Work that previously took creatives hours of cloning, aligning, filling, and blending can now be accelerated: a designer selects an area, types in a prompt, and receives three results to choose from. For example, we took this photo of simple but scenic mountains.

We asked Adobe AI for a series of additions, including more mountains, a cliffside mansion, and even a goat.

Overall, we were extremely impressed with the tool’s ability to blend new elements into the existing scene; the new mountains and mansion were a nearly seamless match to the focus, lighting, and textures of the original photo. While not every generated option was a great fit, the majority of outputs matched our prompts pretty spot-on.

With that experiment a success, we decided to pressure-test some more difficult subject matter. One of the core obstacles for AI image generators is producing realistic, believable human subjects. AI-generated people often fall into what is called the “uncanny valley.” This phenomenon refers to the eerie or unsettling feeling people naturally experience when they see something that looks almost, but not quite, human. Most people have an aversion to imagery that is meant to appear human but has some disproportion or lack of realism. It occurs often with computer-generated imagery that technically appears human, or humanoid, but has a “can’t put my finger on it” inconsistency that reads as robotic or unnatural. Prompting Adobe’s generative fill to add “a man/family eating ice cream” to the amusement park scene below confirmed our hesitations.

The generated results were a mix of graphic styles, ranging from distorted human photography to clip-art-esque illustrations. As expected, facial features and fingers were distorted, since these are the features that are hardest to depict hyper-realistically.

While adding people to the scene created challenges, we found that removing people produced very realistic results. Removing foreground elements is a significant strength of Adobe’s tool, as it cuts down the time needed to take elements out of a scene and recreate the background behind them. In our test, the tool rebuilt the park’s background as a perfect match in a matter of seconds, with an accuracy and attention to detail that would have taken any designer hours to achieve by hand.

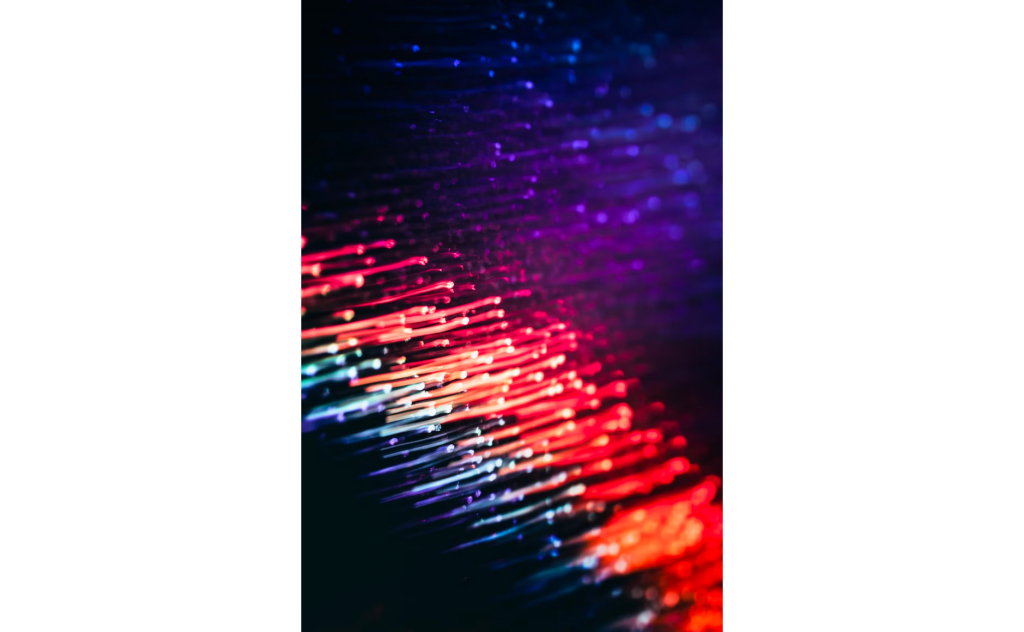

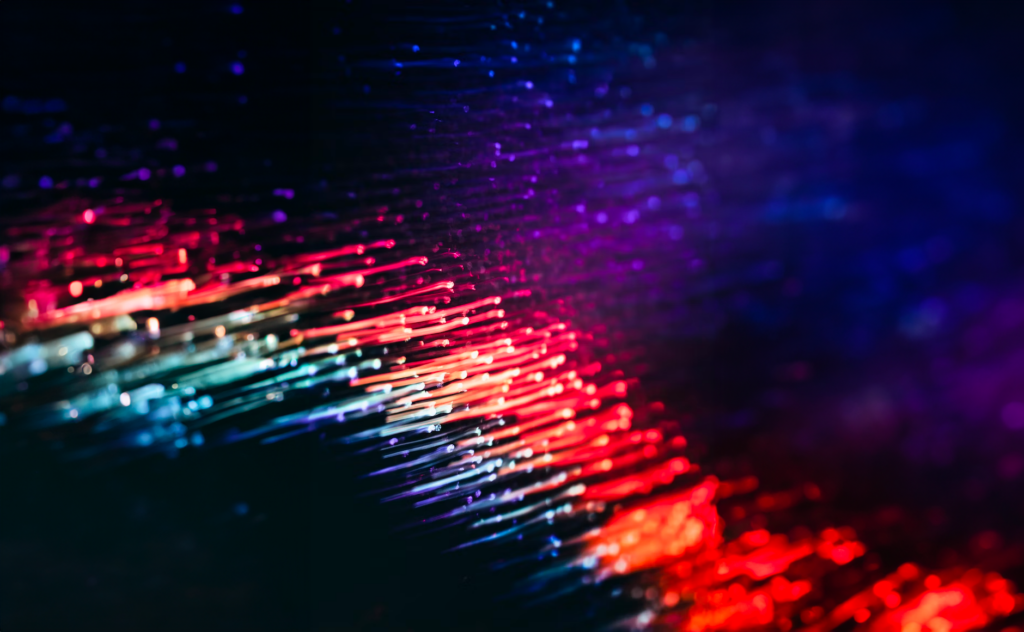

Another powerful potential application of generative fill is resizing imagery to specific proportions. For example, say you found a great image you want to use as a website hero background. The only problem is that the image is in portrait orientation, making it incredibly difficult to adapt to wider landscape or even square formats. Adobe’s generative fill lets the user select specific areas and extend the image’s texture, background, or graphic elements across a larger canvas. This works especially well for abstract and texture-based imagery, where the tool seamlessly recreates the design to fill the new space. The once-limiting portrait image can now be cropped and scaled more flexibly for websites, display ads, social platforms, and more.

Overall, our stance on AI-generated design tools remains the same. They offer undeniable advantages in accelerating tedious tasks and supporting the ideation phase, but they still require a significant amount of human attention and editing. AI tools are not, and likely never will be, a full replacement for human talent, and they still face severe limitations with certain subjects, such as human faces and fingers. They are much more adept at scenery, abstract subjects & textures, and when used correctly, they can be a powerful addition to your creative team.

Interested in AI tools? Check out our Midjourney case study to see how generative AI can enhance ideation.