The generative AI era

It’s challenging to go a full 24 hours without talking, hearing, or reading about AI nowadays. And businesses are trying to understand the best ways to use AI and what they should be more cautious about.

Since ChatGPT’s arrival, enterprises have focused on how generative AI tools can play a role in content creation. Inevitably, this has opened a discussion around the risks of generative AI. Questions about data security, intellectual property, and training data are everywhere. So we wanted to find out what concerns enterprises most when it comes to generative AI, and how they think large language models should be trained.

We surveyed a mix of Acrolinx Fortune 500 customers (including Amazon, HPE, Humana, Fiserv, and Intralox) and other enterprises. Our final data set included 86 responses. Let’s take a look at the findings.

Is security a top concern?

So much of the discussion around generative AI has centered on security. We wanted to know whether security concerns actually influence enterprise decisions about generative AI.

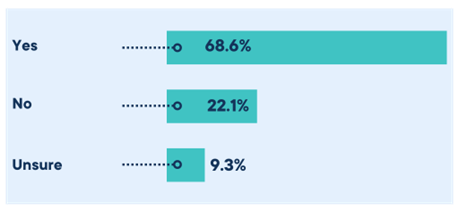

We asked: Do security concerns influence your decision to use generative AI? And the results were:

The majority of respondents (68.6 percent) said that security concerns have influenced their decision. Another 9.3 percent were unsure whether security concerns had influenced their enterprise’s decision, while 22.1 percent said security concerns didn’t affect their decision to use generative AI. This is in keeping with current discourse: most respondents view security as a concern.

It’s easy to guess the reasons why. Security measures can impact customer trust and affect whether or not prospects want to do business with you.
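As a rough cross-check, the percentages reported for the security question can be converted back into approximate respondent counts using the 86 responses in the data set. This is a minimal sketch: the dictionary labels and the `share_to_count` helper are our own illustrative names, and rounding to whole respondents is an assumption about how the published percentages were derived.

```python
# Total responses in the survey data set (from the article).
TOTAL_RESPONDENTS = 86

# Percentages reported for "Do security concerns influence your decision
# to use generative AI?" The labels are illustrative, not survey wording.
reported_shares = {
    "influenced": 68.6,
    "unsure": 9.3,
    "not influenced": 22.1,
}


def share_to_count(percent, total=TOTAL_RESPONDENTS):
    """Convert a reported percentage into a whole number of respondents."""
    return round(percent / 100 * total)


counts = {label: share_to_count(pct) for label, pct in reported_shares.items()}

for label, count in counts.items():
    print(f"{label}: about {count} of {TOTAL_RESPONDENTS} respondents")
```

Under that rounding assumption, the three counts add up to the full 86 responses, which suggests the published percentages are internally consistent.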

What’s your biggest concern?

Enterprises have a lot of concerns to consider when it comes to generative AI systems. These range from incorrect generated code and copyright infringement to sensitive information being shared and a plethora of AI ethics issues. So as a follow-up to our security question, we wanted to dig deeper and find out which of these concerns enterprises the most.

We gave the survey respondents five areas of concern: intellectual property, customer security compliance, bias and inaccuracy, privacy risk, and public data availability and quality, and asked them to rank them.

Of the 86 respondents, 25 selected intellectual property as their biggest concern. This was followed closely by customer security compliance concerns, which received 23 votes. Comparatively, the other three answers (bias and inaccuracy, privacy risk, and public data availability and quality) received between nine and 16 votes each.

The concern ranked lowest by the most respondents, with 43 votes, is privacy risk. However, because more people identified it as their biggest concern than did so for public data availability and quality, it has a higher average.

It’s worth noting that there’s only a 1.23-point difference between the highest and lowest result. Without a clear largest concern, it’s fair to say that all the listed concerns are part of the decision-making process for enterprises.
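It may seem odd that the concern most often ranked last can still end up with a higher average than another concern. The sketch below shows how that can happen. It assumes a simple scoring scheme (five points for a first-place rank down to one point for last place), which may not be the scheme used in the survey, and the ranks are invented toy values for five imaginary respondents, not survey data.

```python
# Assumed scoring scheme: with five options, rank 1 earns 5 points
# and rank 5 earns 1 point. The survey's actual scheme isn't stated.
NUM_OPTIONS = 5


def mean_score(ranks, num_options=NUM_OPTIONS):
    """Average score for one option, given the rank each respondent gave it."""
    scores = [num_options + 1 - rank for rank in ranks]
    return sum(scores) / len(scores)


# Toy ranks from five imaginary respondents (NOT survey data).
# Option A is ranked last (5) by most of them but first (1) by two.
option_a_ranks = [1, 1, 5, 5, 5]
# Option B is never ranked last, but never ranked near the top either.
option_b_ranks = [4, 4, 4, 4, 4]

print("Option A mean score:", mean_score(option_a_ranks))
print("Option B mean score:", mean_score(option_b_ranks))
```

In this toy case, the option that is ranked last most often still scores higher on average, because its first-place votes outweigh its last-place ones.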

Do enterprises restrict current generative AI use?

We were also curious about how many enterprises restrict their employees’ use of generative AI.

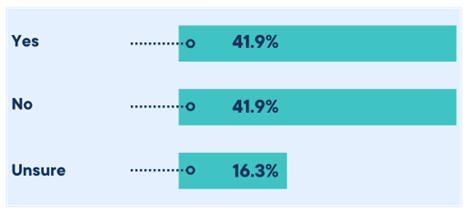

The results show an even split: 41.9 percent of respondents said their enterprise restricts generative AI, and the same share said it doesn’t. In other words, 41.9 percent of enterprises place no restriction on the use of generative AI.

Notably, 16.3 percent are unsure whether their enterprise restricts generative AI use. Without clearly communicated guidelines, those “unsure” respondents could be using generative AI in ways their organization hasn’t sanctioned. Given the security concerns established earlier, it’s surprising that over 40 percent of respondents report no restrictions on generative AI use.

Training generative AI models

Considering all the areas of concern that enterprises have to factor into their decision-making process, we wanted to know how enterprises would prefer to train their generative AI models.

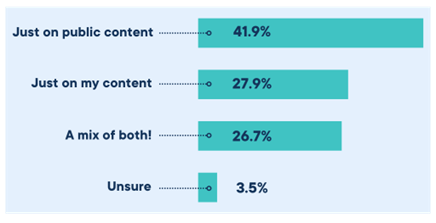

So we asked: How should your generative AI model be trained?

Surprisingly, the largest share of respondents, 41.9 percent, would rather use an AI model that trains on publicly available content. Just over a quarter would like to train their model on only their own content, and a similar share, also just over a quarter, would like a mix of their own content and publicly available content. Only a small percentage (3.5 percent) were unsure.

The preference for public content speaks to the widely available digital content that generative AI tools can use to train machine learning models. Though it remains surprising that enterprises aren’t more focused on using their own content to build models — especially when you factor in all the concerns we’ve discussed.

As generative AI is still an emerging area, it’s fair to say that people are still forming their opinions about it, and these could change over time. But as there’s so much available public data, enterprises might be hoping it will advance their AI capabilities.

Where do enterprises stand with generative AI?

One question we haven’t covered in this blog is whether enterprises are adopting generative AI. The answer is yes, overwhelmingly so. Out of 86 respondents, 82 were either already using generative AI to create content or planning to do so soon. So we know that business leaders and enterprises are embracing generative AI tools with enthusiasm.

With AI applications, there’s significant potential to increase content velocity. And with so much opportunity available, it’s easy to see why enterprises want to be early adopters of this technology, despite all the potential concerns.

If you want to know what types of content enterprises want to use generative AI to create, whether enterprises currently restrict the use of generative AI, and how enterprises want to train their generative AI models, make sure to download our free report. Or, if you want to learn how AI Enrich for Acrolinx allows you to use generative AI confidently and safely, click here.