Should You Run Simultaneous Experiments? A Guide to Avoiding Conflicting Results

There’s some debate in the optimization world about whether or not to run simultaneous experiments. Some believe that running simultaneous A/B tests will muddy your results and produce inaccurate data. Others argue that running A/B experiences on various pages of your website at the same time can help you test more things and identify winning strategies faster.

So, which is right?

In this blog post, we’ll explore the benefits and drawbacks of simultaneous experiments – and help you decide which approach is best for your optimization program.

After reading this blog article, you will be able to answer the following questions:

- Can I run simultaneous split URL experiences?

- Can I run simultaneous A/B experiences?

- Can I run an A/A experience and an A/B experience at the same time?

The short answer is yes: multiple experiences can run simultaneously on a single page or set of pages. But keep in mind that bucketing in one experience may affect the data from another experience running at the same time.

How Does Experience Overlap Happen and Should You Be Concerned?

The main thing to keep in mind when running simultaneous experiments is interaction: in some cases, two changes have a different effect on behavior when combined than when each is tested in isolation. This is most likely when experiments target the same element, the same page, the same user flow, or the same audience.
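To make “interaction” concrete, here is a small sketch with made-up conversion rates for two experiments running at once (each change either shown or not). The numbers are purely hypothetical; what matters is that the lift from change 1 depends on whether change 2 is also live.

```python
# Hypothetical conversion rates for each combination of two simultaneous
# experiments, keyed by (change_1_shown, change_2_shown).
rates = {
    (False, False): 0.050,  # neither change
    (True, False): 0.060,   # change 1 only
    (False, True): 0.055,   # change 2 only
    (True, True): 0.052,    # both changes together
}

def lift_of_change_1(change_2_shown: bool) -> float:
    """Absolute lift from change 1 while change 2 is held fixed."""
    return rates[(True, change_2_shown)] - rates[(False, change_2_shown)]

lift_alone = lift_of_change_1(False)       # change 1 measured without change 2
lift_combined = lift_of_change_1(True)     # change 1 measured alongside change 2
interaction = lift_combined - lift_alone   # nonzero means the changes interact

print(f"change 1 alone:         {lift_alone:+.3f}")
print(f"change 1 with change 2: {lift_combined:+.3f}")
print(f"interaction:            {interaction:+.3f}")
```

In this made-up case, change 1 looks like a winner on its own but slightly hurts conversion when change 2 is also live, which is the kind of effect two overlapping tests can hide from each other.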

Let’s look at some examples of where experience overlap might happen and if it should be considered a problem.

Testing the Same Element

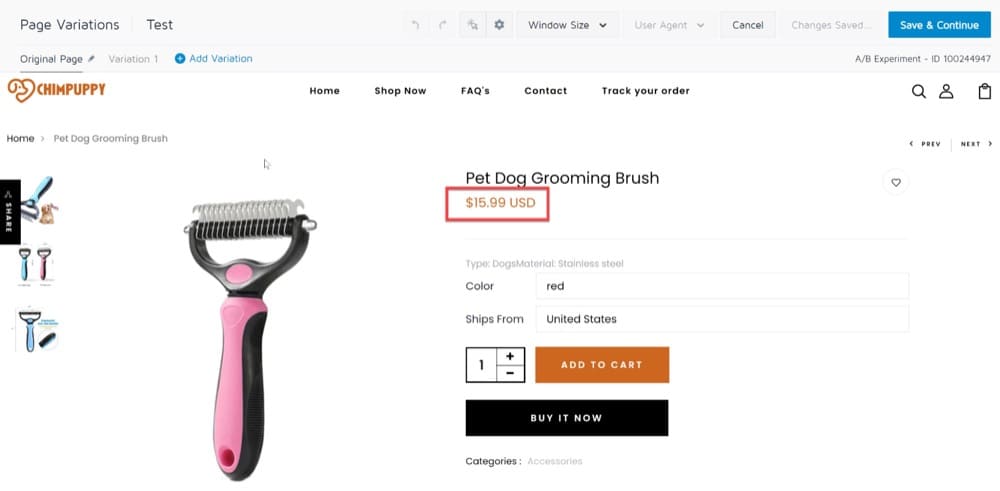

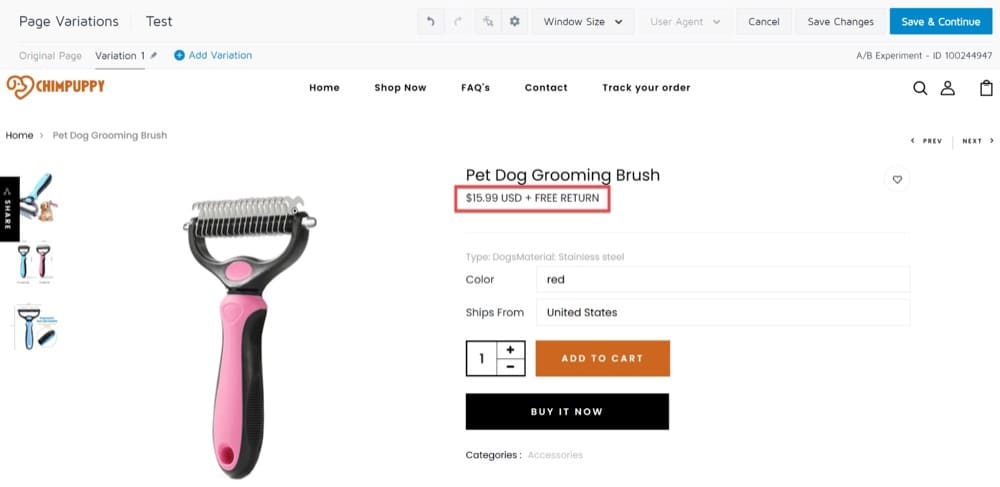

Switching up the design of your product pages to highlight reward features, such as a free return policy and free delivery, is one example of an A/B test you can run.

One of our customers tested this exact scenario. Based on data from their customer service department, they hypothesized that customers weren’t aware of the brand’s free return policy because the feature wasn’t visible enough on product pages. They then ran an A/B test showing the feature more prominently and measured how customers responded.

Here is what the original and variation look like:

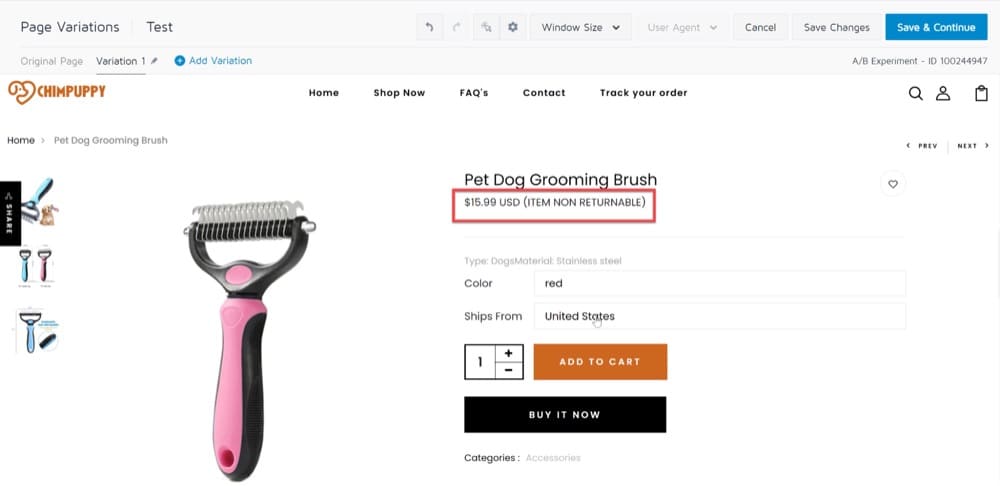

However, implementing the test was more complex because the changes could not be applied to every product page: some products weren’t eligible for free returns, certain on-sale items couldn’t be modified, and so on. For these reasons, they ran a second A/B experience in parallel that changed the same element, adding the disclaimer “Item is non-returnable” to many of these pages.

Because both A/B experiences modify the same website element, their results overlap, which makes it difficult to draw clear conclusions from either one.

Testing on the Same Page

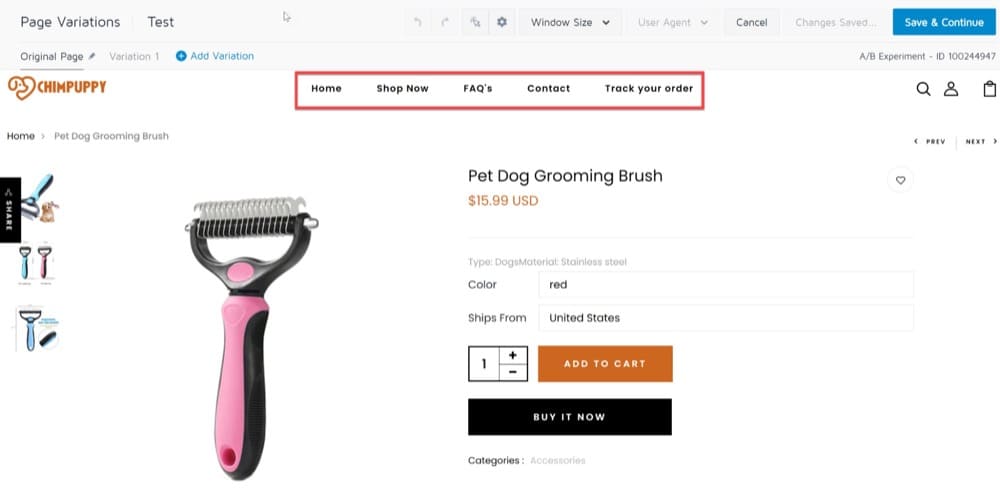

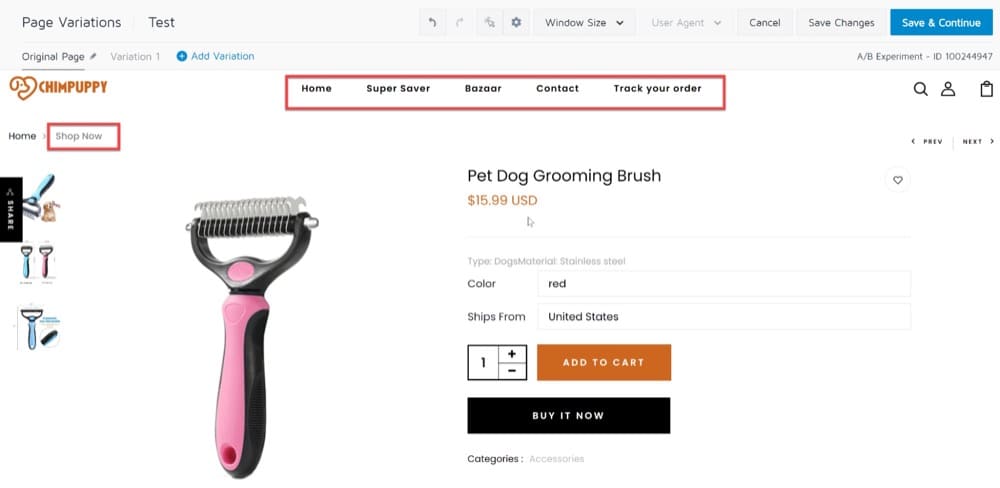

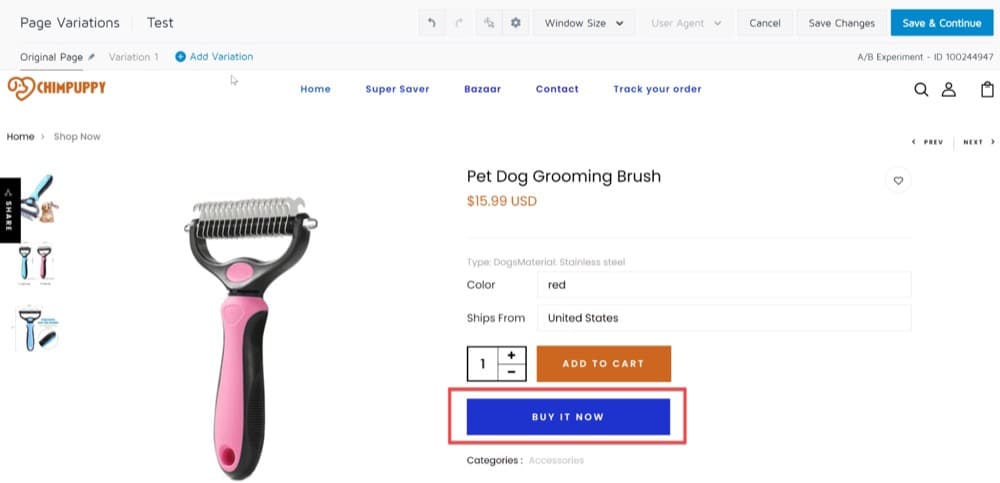

Another example of an A/B experience was when a customer of ours optimized their product pages to increase visits-to-order.

As they analyzed each element of the product pages and tracked goal conversions, they found that the main navigation bar links received the most clicks, especially “Shop Now”. Our customer recognized the importance of sending more qualified traffic to category pages rather than leaving visitors wandering on the home page.

As a result, the customer decided to replace the “Shop Now” section with other categories such as “super saver”, “bazaar”, and so on. Additionally, the “Shop Now” section was moved to the site’s left side to make the page more visually appealing and attract qualified visitors.

This is how the product page looked at first:

Meanwhile, another A/B experiment was being conducted on the product pages to determine if a different color of the “Buy now” button would result in better conversions.

Because these two A/B experiences change elements on the same page, some overlap in the results is inevitable.

Testing Users Participating in the Same Funnel/Flow

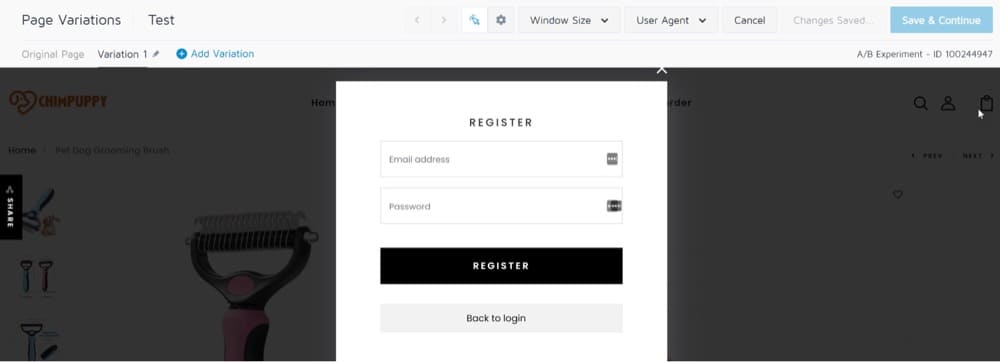

Experience overlap might also occur when testing users participating in the same funnel. Most websites drive conversions through multiple funnels. While the primary focus may be on purchases, account creation or acquisition can also be a significant driving force in the business.

Running experiences on a product page is likely to have an impact on purchase conversion; however, testing the form layout on an account creation page can help improve that funnel. Acquisition testing includes everything from driving traffic to the site to gathering email addresses for marketing purposes.

Experiences that run on the same pages of the website can overlap and even interfere with each other’s changes. Results are especially likely to be affected if the experiences’ goals are tied to the same funnel.

Let’s say that you’re trying to get more completed signups. Upon landing on your site, users are asked to register.

To set up a conversion funnel for signups, you could track the following events:

- No. of users on signup

- No. of completed signups

- No. of homepage screen loads
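Once you have counts for these three events, step-by-step conversion rates follow directly. Here is a minimal sketch; the counts are hypothetical and the event labels simply mirror the list above.

```python
# Hypothetical event counts for the signup funnel, in funnel order.
funnel = [
    ("users on signup", 10_000),
    ("completed signups", 3_200),
    ("homepage screen loads", 2_900),
]

def step_conversion(funnel):
    """Return (from_step, to_step, rate) for each consecutive pair of steps."""
    return [
        (a_name, b_name, b_count / a_count)
        for (a_name, a_count), (b_name, b_count) in zip(funnel, funnel[1:])
    ]

for src, dst, rate in step_conversion(funnel):
    print(f"{src} -> {dst}: {rate:.1%}")

overall = funnel[-1][1] / funnel[0][1]
print(f"overall: {overall:.1%}")
```

Every hypothesis that follows changes one of these same step rates, which is why their effects are hard to separate when they run at the same time.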

You can then formulate several hypotheses on how to improve the funnel by testing the following changes:

- Add onboarding to the signup process

- Shorten the signup form to make it more user-friendly

- Remove signup completely

In this case, however, running these changes as separate, simultaneous A/B experiences won’t tell you the exact impact of each one: they all affect the same funnel, so their results will overlap.

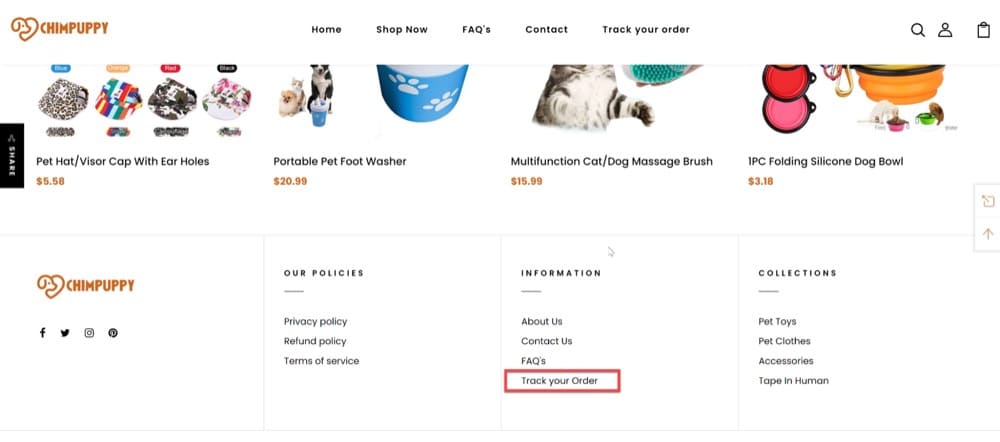

Running Site-Wide Experiences

There may be times when you need to experiment with an element that appears on every page. Let’s say you want to test changing the color or font size of the footer call-to-action to see whether it increases conversions.

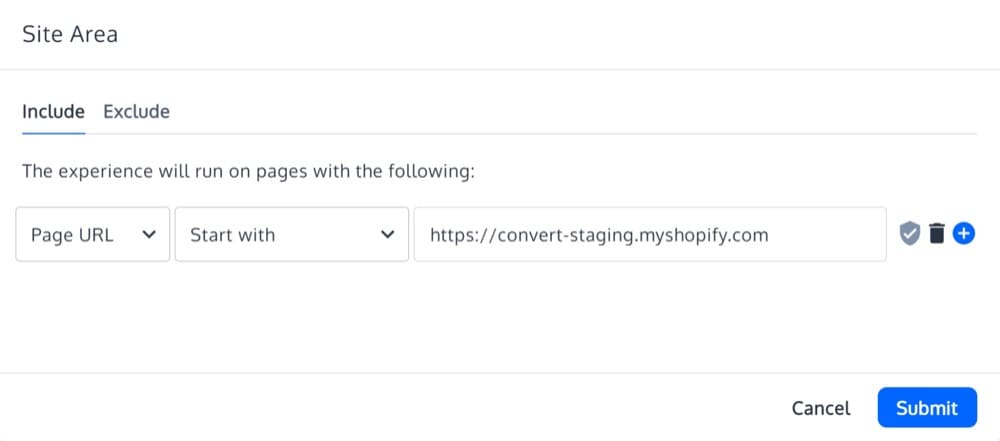

The process is straightforward to implement with Convert: simply add all pages to your targeting.

That’s all!

Site-wide targeting, however, means the experience also runs on pages where other A/B tests are live, so their results will overlap.
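Before launching a site-wide experience, it can help to list which running experiences share pages with it. The sketch below models each experience’s targeting as a set of hypothetical page paths, with “*” standing for all pages; the experience names and the wildcard convention are illustrative assumptions, not Convert’s targeting syntax.

```python
from itertools import combinations

# Hypothetical targeting: experience name -> set of page paths.
# "*" is an illustrative shorthand for "all pages" (site-wide).
ALL_PAGES = "*"
targeting = {
    "footer CTA color": {ALL_PAGES},
    "product page layout": {"/product/shoes", "/product/bags"},
    "signup form length": {"/signup"},
}

def pages_overlap(a: set, b: set) -> bool:
    """Two experiences overlap if either is site-wide or they share a page."""
    return ALL_PAGES in a or ALL_PAGES in b or bool(a & b)

def overlapping_pairs(targeting: dict) -> list:
    """List every pair of experiences whose page targeting overlaps."""
    return [
        (x, y)
        for x, y in combinations(sorted(targeting), 2)
        if pages_overlap(targeting[x], targeting[y])
    ]

for x, y in overlapping_pairs(targeting):
    print(f"overlap: {x!r} and {y!r}")
```

The site-wide footer experience overlaps with everything, while the two page-specific experiences do not overlap with each other.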

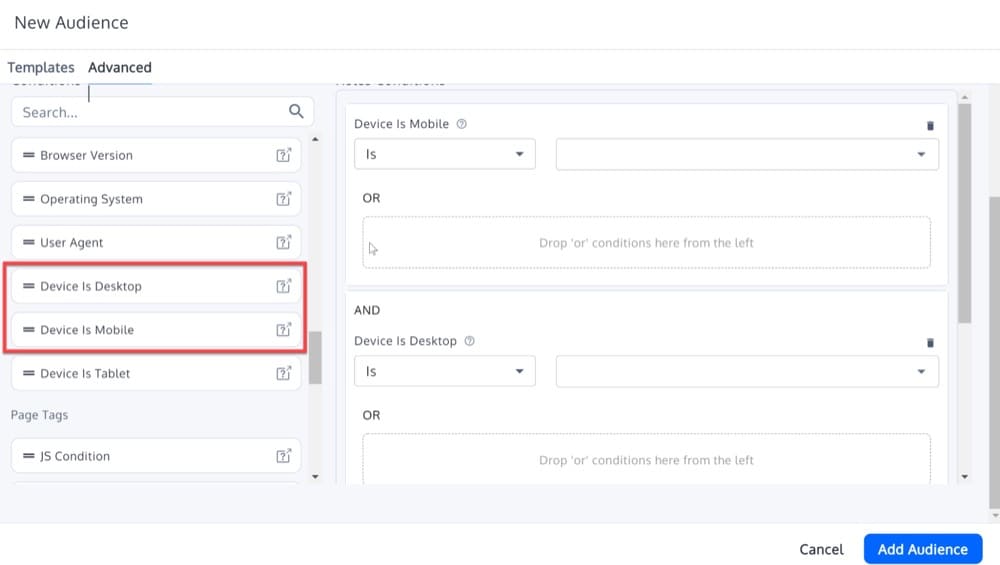

Testing the Same Audience/Visitors

Consider the following scenario: you want to evaluate two aspects of your ecommerce store, so you set up two A/B tests, each targeting both mobile and desktop users:

- You’re trying to see if making your “Add to Cart” button red instead of blue increases clicks.

- You’re trying out a new checkout process that cuts the number of steps from five to two to see if more visitors complete their purchase.

If both actions lead to the same success event (a completed transaction), it can be difficult to determine whether the red button or the better checkout experience boosted conversions on desktop and mobile devices.

To avoid overlapping results and other experience delivery issues, you should run the above tests on different audiences (for example, one on mobile users only and the other on desktop users only).
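Splitting the tests by audience boils down to a simple routing rule: each device segment is eligible for exactly one of the two tests. Here is a minimal sketch, assuming a device label is already known for every visitor; the test names and the segment mapping are hypothetical.

```python
# Hypothetical mapping from audience segment to the only test it may enter.
TEST_BY_SEGMENT = {
    "mobile": "add_to_cart_color",
    "desktop": "two_step_checkout",
}

def eligible_tests(device: str) -> list[str]:
    """Return the tests a visitor on this device can be bucketed into."""
    test = TEST_BY_SEGMENT.get(device)
    return [test] if test else []

visitors = [("v1", "mobile"), ("v2", "desktop"), ("v3", "tablet")]
for visitor_id, device in visitors:
    print(visitor_id, device, eligible_tests(device))
```

Because a visitor belongs to only one segment, nobody sees both changes, so each test’s conversions can be attributed to its own change. The cost is that each test only receives its segment’s traffic.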

The main disadvantage of segmenting tests this way is that each test receives less traffic, which may lengthen how long it needs to run. However, because it builds on personalization techniques, it is often the preferred method for avoiding experience overlap when A/B testing. When segments are chosen carefully, their impact on the overall experience will be minimal.

If several tests share a similar goal, all of their results will hinge on that single goal. For each experience to fulfill its purpose, its goals must not conflict with those of the other experiences.

Strategies for Running Successful Tests

There is no one-size-fits-all solution when it comes to running tests that don’t overlap. As you move through each stage of your experimentation journey, your needs will dictate how you proceed.

To help you make an informed decision, let’s go over the most common strategies you can use to deal with overlap.

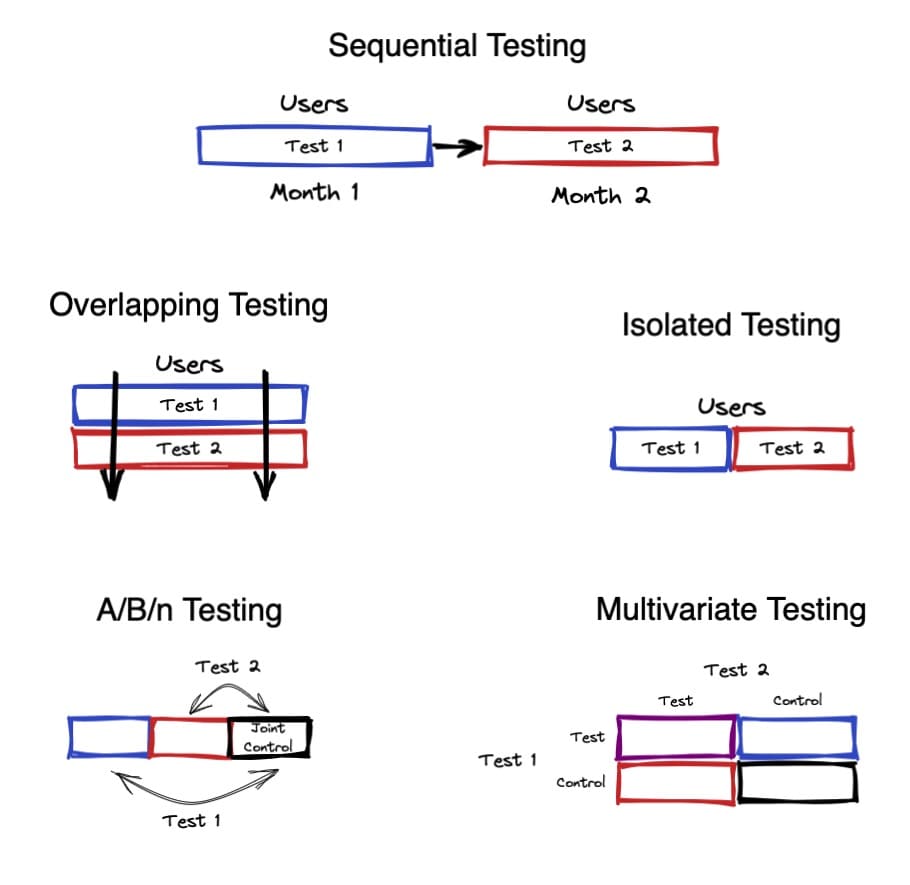

1. Simultaneous Experiences without Overlap (Isolated)

The most straightforward strategy is typically the one you’ve been using up to this point: isolated experiences that run simultaneously.

As we discussed above, isolated experiences have no overlap and the outcomes of one experience won’t affect the outcomes of another.

The following cases require this strategy:

- When overlap is technically impossible: your tests share none of the overlap conditions described above (same element, same page, same funnel, site-wide targeting, or same audience).

- When the user experience could break: some combinations of experiences can ruin the user experience, so these experiences must be run separately.

- When the primary goal requires a precise measurement of a single metric, so only isolated experiments make sense.

In these cases, if you run two experiences concurrently on two different pages with two different goals, there is no way for one to affect the other. Visitors who take part in experience 1 won’t take part in experience 2, and vice versa.

Beyond these cases, running experiences in simultaneous isolated lanes brings no efficiency gain. For any given number of users or sessions, running two experiences in separate lanes takes the same time as running them one after the other. If you have 10,000 users every month and need to run two experiences, each of which requires 5,000 users, it will still take a month to complete both, whether they run in parallel lanes or back to back.
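The arithmetic behind this claim fits in a few lines. This sketch uses the 10,000 users per month and 5,000 users per experience from the example, and assumes traffic is split evenly between lanes and that each experience simply needs a fixed number of users; both are simplifications.

```python
MONTHLY_USERS = 10_000               # traffic figure from the example above
USERS_PER_EXPERIENCE = [5_000, 5_000]

def months_parallel_lanes(monthly_users, needs):
    """Split traffic evenly into one isolated lane per experience."""
    lane_traffic = monthly_users / len(needs)
    # All lanes run at once, so the slowest lane sets the duration.
    return max(need / lane_traffic for need in needs)

def months_sequential(monthly_users, needs):
    """Give each experience all the traffic, one after another."""
    return sum(need / monthly_users for need in needs)

print(months_parallel_lanes(MONTHLY_USERS, USERS_PER_EXPERIENCE))
print(months_sequential(MONTHLY_USERS, USERS_PER_EXPERIENCE))
```

Both approaches come out to one month: isolated lanes buy separation between experiences, not speed.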

Besides, this strategy has an obvious disadvantage: running experiences in isolated lanes prevents you from investigating potential interactions between variations.

Separate testing lanes are like running an experiment on desktop users only and then rolling out the winning variation to both desktop and mobile users. The impact on mobile users may be the same as on desktop users, but there may also be a sizable difference.

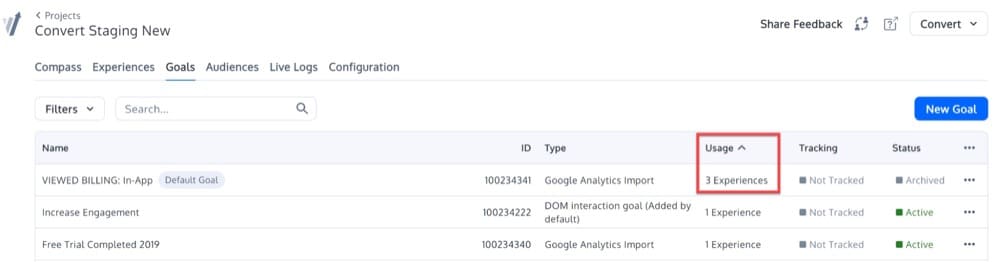

2. Non-Simultaneous (Sequential) Experiences

If there is no way to avoid experience overlap, consider running sequential experiences: any experience that could overlap with another is run only after the other has finished.

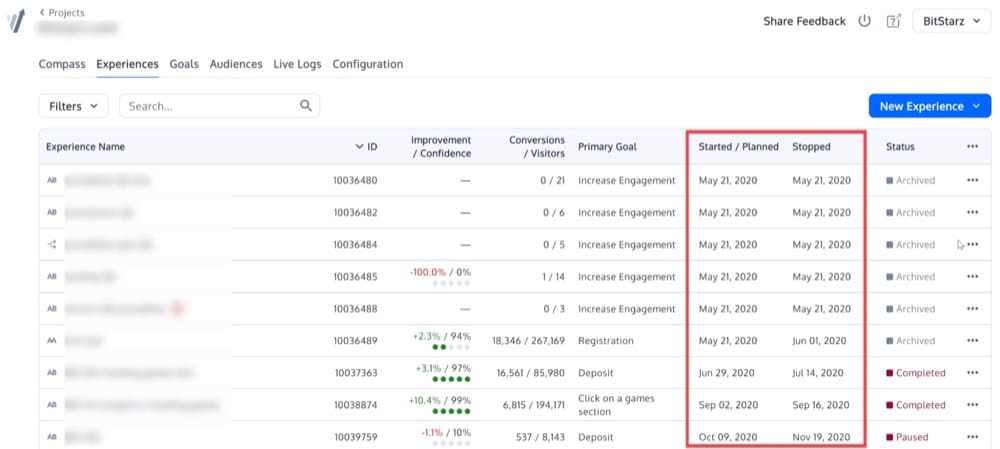

You can use the Convert columns “Started/Planned” and “Stopped” to have visibility over your sequential tests:

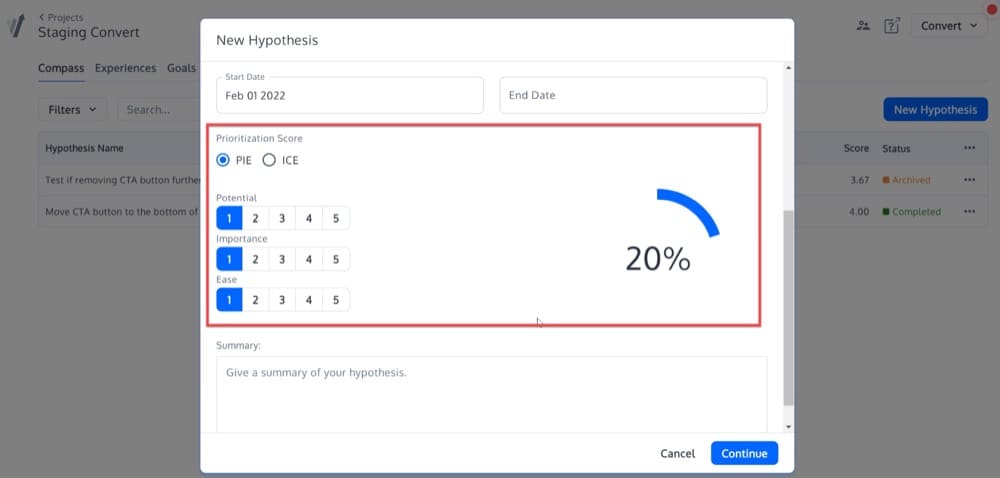

This strategy can be made even more effective with a prioritization roadmap.

PIE and ICE frameworks are two effective options for prioritizing experiences for your team.

The PIE framework (developed by WiderFunnel) is a popular prioritization method that ranks tests on three criteria: potential, importance, and ease. Each test’s PIE score is the average of its three criterion scores, and you prioritize tests by ranking them on that score.

The Impact, Confidence, and Ease (ICE) model (developed by Sean Ellis of GrowthHackers) is very similar to PIE, except that it uses a confidence factor in place of “potential”.
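A PIE-ranked backlog can be as simple as averaging the three criterion scores and sorting. This sketch assumes each criterion is scored from 1 to 10 and uses hypothetical test ideas. ICE works the same way with impact, confidence, and ease as the criteria, although teams differ on whether they average or multiply ICE scores, so treat the averaging as a choice rather than a rule.

```python
from statistics import mean

# Hypothetical backlog: idea -> (potential, importance, ease), each scored 1-10.
backlog = {
    "homepage hero headline": (8, 9, 6),
    "checkout trust badges": (7, 8, 9),
    "footer CTA color": (3, 4, 10),
}

def pie_score(scores: tuple[int, int, int]) -> float:
    """PIE score = average of potential, importance, and ease."""
    return mean(scores)

# Highest score first: this becomes the order of the sequential roadmap.
ranked = sorted(backlog, key=lambda idea: pie_score(backlog[idea]), reverse=True)
for idea in ranked:
    print(f"{pie_score(backlog[idea]):.2f}  {idea}")
```

Running the ranked ideas in order gives you a sequential roadmap. Ideas that touch different pages can still be scheduled side by side when they don’t overlap.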

Having no roadmap will limit your ability to make the most of your traffic and resources.

It is possible, for instance, to unintentionally accumulate a backlog of homepage ideas that must be implemented one after another. If this bottleneck persists, you may be forced into a waiting game instead of being able to test other parts of your website at the same time. Or you might instead run several tests simultaneously without taking into account any possible overlap effects, which would produce suspect results.

3. Simultaneous Experiences with Overlap

Suppose that after analyzing your experiences, you conclude that they overlap and therefore need to be isolated. How do you do that? Simple: run the first test, then the second, as explained in the sequential section above.

Imagine, however, that you want to run a few tests during the Christmas period or another holiday season, because that is when you receive more visitors and the experiences can have a more significant impact. Then what? Can you run all your experiences one after another? Obviously not.

The strategies below let you run overlapping experiences simultaneously while keeping that overlap under control.

a. A/B/N Experiences

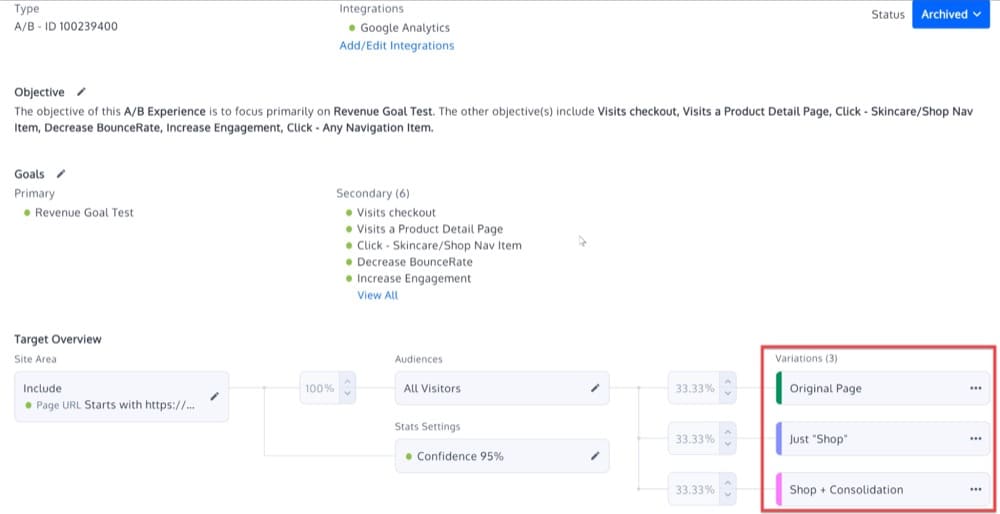

The first strategy under this category is A/B/N testing, which involves testing more than two variations at once. A/B/N does not refer to a third variation, but any number of additional variations: A/B/C, A/B/C/D, and any other extended A/B test.

The principles of A/B/N testing remain the same regardless of the number of additional variations: divide users into groups, assign variations (typically of landing pages or other webpages) to groups, monitor the change of a key metric (typically conversion rate), examine the experience results for statistical significance, and deploy the winning variation.
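The “divide users into groups” step is usually implemented with deterministic bucketing, so a returning visitor always sees the same variation. Below is a generic hash-based sketch, not Convert’s actual bucketing algorithm; the experiment key and visitor IDs are hypothetical.

```python
import hashlib

def assign_variation(visitor_id: str, experiment_key: str, variations: list[str]) -> str:
    """Deterministically map a visitor to one of N equally weighted variations."""
    # Salting with the experiment key keeps bucketing independent across experiments.
    digest = hashlib.sha256(f"{experiment_key}:{visitor_id}".encode()).hexdigest()
    bucket = int(digest, 16) % len(variations)
    return variations[bucket]

variations = ["A (original)", "B", "C", "D"]   # an A/B/C/D test
counts = {v: 0 for v in variations}
for i in range(20_000):
    counts[assign_variation(f"visitor-{i}", "hero-test", variations)] += 1
print(counts)  # roughly 5,000 per variation
```

Adding a variation only means adding an entry to the list. The traffic is then shared among more groups, which is the cost the next paragraph describes.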

However, testing too many variations (when only one can be chosen) splits your website traffic into more groups. This increases the time and traffic needed to reach a statistically significant result and can create “statistical noise”.
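To see why extra variations cost time, here is a rough sketch that uses the standard normal-approximation sample size for comparing two proportions, at 95% confidence (two-sided) and 80% power. The baseline rate, target rate, and daily traffic are hypothetical, and the sketch ignores multiple-comparison corrections, which would push the numbers higher.

```python
import math

Z_ALPHA = 1.959964   # two-sided 95% confidence
Z_BETA = 0.841621    # 80% power

def n_per_variation(p_base: float, p_target: float) -> int:
    """Visitors needed in each arm to detect a change from p_base to p_target."""
    variance = p_base * (1 - p_base) + p_target * (1 - p_target)
    return math.ceil((Z_ALPHA + Z_BETA) ** 2 * variance / (p_target - p_base) ** 2)

DAILY_VISITORS = 2_000
n = n_per_variation(0.05, 0.06)   # hypothetical: 5% baseline, 6% target
for arms in (2, 3, 4, 5):         # A/B, A/B/C, A/B/C/D, A/B/C/D/E
    total = n * arms
    print(f"{arms} arms: {total:,} visitors, ~{math.ceil(total / DAILY_VISITORS)} days")
```

The per-variation sample size is set by the effect you want to detect, so every added variation adds another full arm’s worth of visitors, and the test duration grows roughly linearly with the number of variations.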

It is also important not to lose sight of the big picture when running multiple A/B/N experiments. Variations that performed best in their own experiments are not guaranteed to work well together.

In such cases, consider running a multivariate test to try the variations in combination and make sure improvements carry through to top-level metrics.

b. Multivariate Experiences (MVT): Combine Many Experiences in a Single Test

A multivariate experience (MVT) runs numerous combinations of different changes at once.

To find out which combination, out of all the possible ones, has the greatest influence on the goals, several elements on the same page are modified simultaneously.

Unlike A/B/N tests, which compare whole variations against each other, multivariate testing tells you which combination of changes to multiple elements performs best for your visitors.

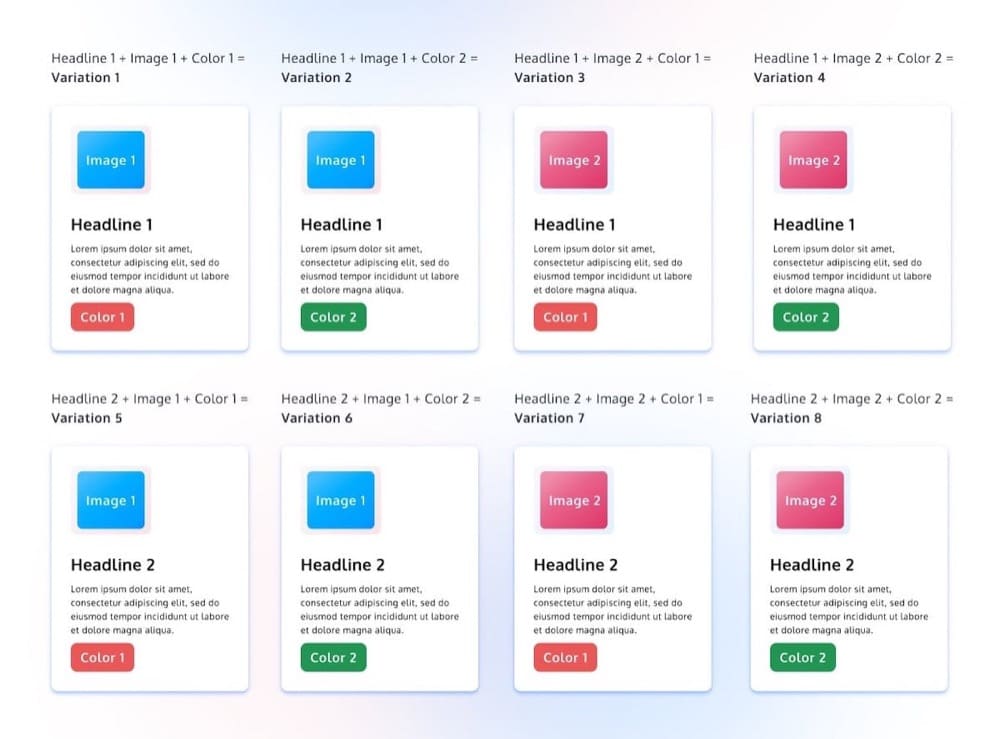

For example, if you want to test two different headlines, two images, and two button colors on the page, your MVT will run all 2 × 2 × 2 = 8 combinations of these elements.
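A full-factorial MVT like this one tests every combination, and the combinations are easy to enumerate. The labels below are placeholders for the two headlines, two images, and two button colors in the example.

```python
from itertools import product

headlines = ["Headline 1", "Headline 2"]
images = ["Image 1", "Image 2"]
button_colors = ["Color 1", "Color 2"]

# Full factorial: every headline x every image x every button color.
combos = list(product(headlines, images, button_colors))
for i, combo in enumerate(combos, start=1):
    print(i, combo)
print(len(combos), "combinations")
```

Each printed tuple is one version of the page that a share of your visitors would see.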

This MVT tests different elements (headline, image, and button color) simultaneously, in different combinations.

How to Set Up an MVT in Convert Experiences

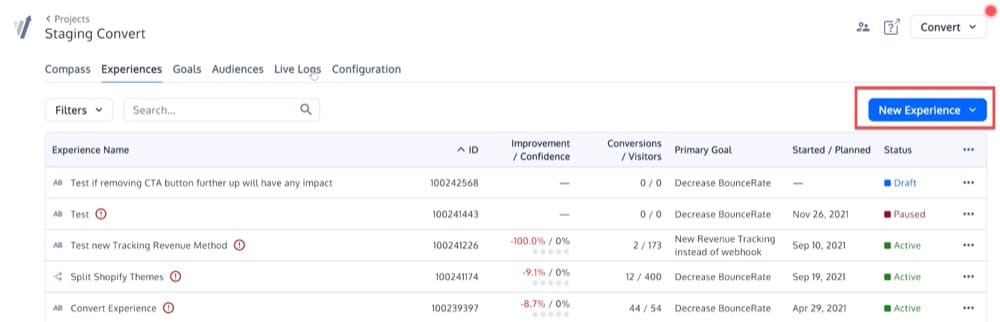

First, from the Experiences tab in your Convert account, select “New Experience”:

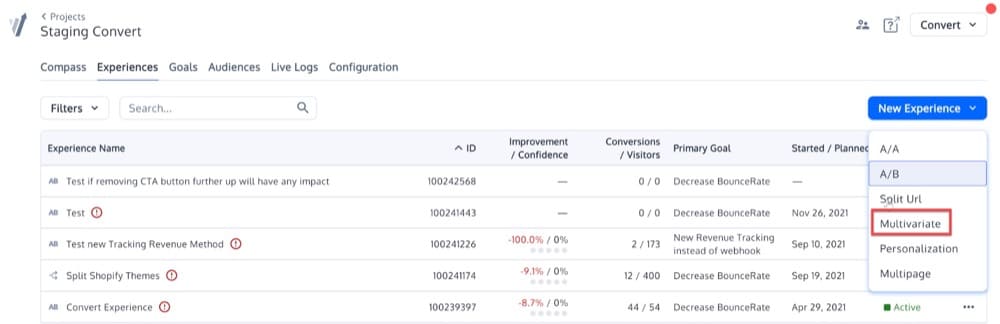

Now name your experience (let’s use “My first MVT”), select the multivariate option, and click Continue:

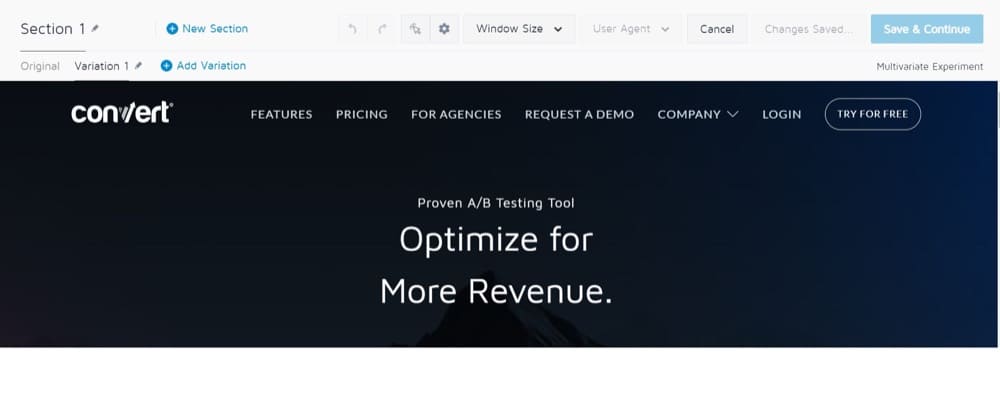

There are sections and variations in an MVT. Sections are the locations on your page where you want to test one or multiple variations.

The following are examples of sections:

- Logo

- Headline

- First Paragraph

- Opt-in form

Each section then contains its own variations, structured as follows:

- Section: Logo
  - Original logo
  - Variation 1) logo left
  - Variation 2) logo right

- Section: Headline
  - Original headline
  - Variation 1) headline “Search Now My Friend”
  - Variation 2) headline “Give Search A Go”

- Section: First Paragraph
  - Original first paragraph
  - Variation 1) first paragraph “red”
  - Variation 2) first paragraph “blue”

- Section: Opt-in form
  - Original opt-in form
  - Variation 1) opt-in form with extra field lastname
  - Variation 2) opt-in form with checkbox “whitepaper”
  - Variation 3) opt-in form floating left
  - Variation 4) opt-in form “woman face”
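Because each section’s options (the original plus its variations) multiply, a structure like this grows quickly. The counts below come straight from the list above, and the sketch assumes a full-factorial test with traffic split equally across all combinations.

```python
from math import prod

# Options per section = original + variations, taken from the list above.
options_per_section = {
    "Logo": 1 + 2,
    "Headline": 1 + 2,
    "First Paragraph": 1 + 2,
    "Opt-in form": 1 + 4,
}

total_combinations = prod(options_per_section.values())
share_per_combination = 1 / total_combinations   # assumes an equal split

print(total_combinations)  # 3 * 3 * 3 * 5 = 135
print(f"{share_per_combination:.2%} of traffic per combination")
```

With 135 combinations, each one receives well under 1% of traffic, which leads directly to the traffic restriction discussed below.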

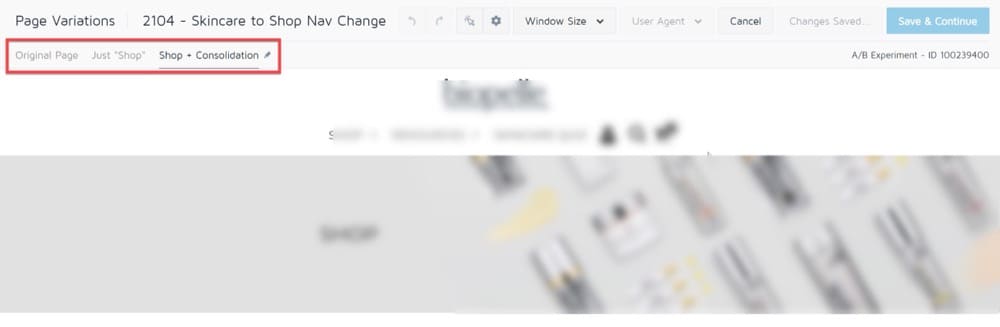

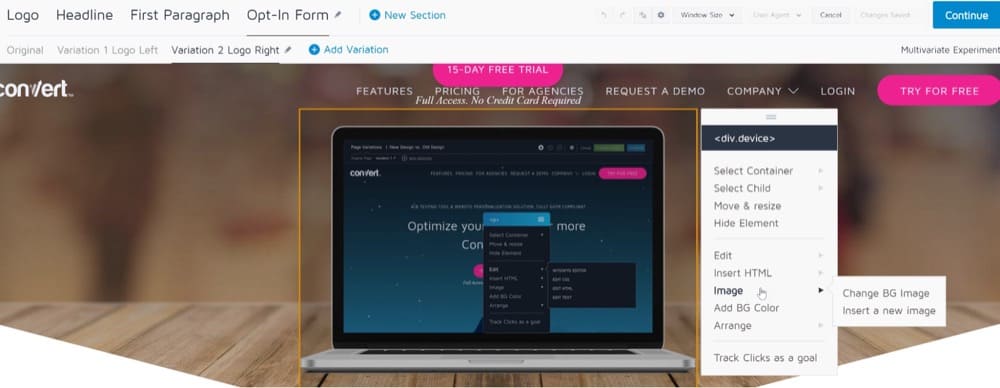

Here is how the above structure appears in the Convert Visual Editor.

The URL for the page you want to test will be loaded in the Visual Editor. After that, you can edit the first variation. Changing content is as simple as clicking on any orange highlighted area. By clicking the green plus sign next to variation names, you can add new variations.

You can, for example:

- Click an element to change (elements are highlighted with orange borders)

- Select an action in the menu, like changing an image source

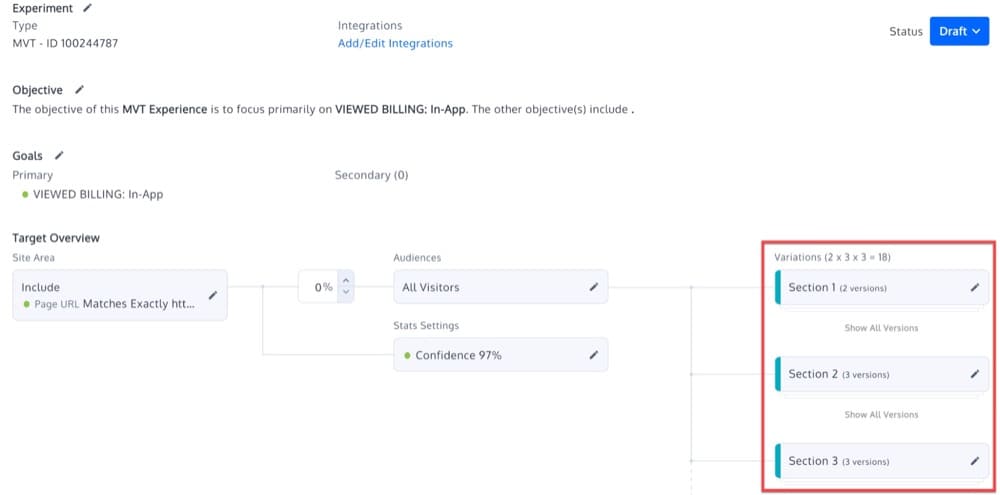

The MVT experience summary will look like this:

MVT, however, comes with a few restrictions.

The first restriction relates to the number of visitors required to make the findings of your multivariate experience statistically significant.

Increasing the number of variables in a multivariate test can produce a large number of combinations. In a standard A/B test, 50% of traffic goes to the original and 50% to the variation; a multivariate test may allocate only 5, 10, or 15% of traffic to each combination. In practice, this means longer testing periods and, in some cases, an inability to reach the statistical significance needed to make a decision.
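The cost of thinner allocation is easy to quantify: if every arm needs the same number of visitors, the time to finish scales with 1 / (share of traffic per arm). Here is a sketch using the 50%, 15%, 10%, and 5% shares mentioned above, with hypothetical traffic and sample-size figures.

```python
import math

DAILY_VISITORS = 2_000        # hypothetical site traffic
VISITORS_PER_ARM = 8_000      # hypothetical sample size each arm needs

def days_to_finish(share_per_arm: float) -> int:
    """Days until an arm receiving this share of traffic reaches its sample size."""
    return math.ceil(VISITORS_PER_ARM / (DAILY_VISITORS * share_per_arm))

for label, share in [("A/B (50%)", 0.50), ("MVT (15%)", 0.15),
                     ("MVT (10%)", 0.10), ("MVT (5%)", 0.05)]:
    print(f"{label}: {days_to_finish(share)} days")
```

Going from a 50% to a 5% share per arm makes the same test take ten times as long.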

Another restriction is the complexity of MVTs. An A/B test is often easier to set up and analyze than a multivariate test. Even creating a basic multivariate test is time-consuming, and it’s too easy for something to go wrong. It might take a few weeks or even months for a minor flaw in the experience design to show up.

If you don’t have a lot of testing experience (running a variety of different types of tests on different websites), you shouldn’t even consider a multivariate test. You might be better off with the next strategy I’m covering: mutually exclusive experiences.

c. Mutually Exclusive Experiences

You can also run overlapping experiences simultaneously by making them mutually exclusive. Keep in mind that whether you can do this depends on your A/B testing platform. Essentially, you divide your traffic into as many groups as there are experiences and ensure that each group participates in only one experience.
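Platform aside, the core idea can be sketched as a single hash-based split: each visitor lands in exactly one group, and each group feeds exactly one experience. This is a generic illustration with hypothetical experience names and layer key, not how Convert implements exclusivity.

```python
import hashlib

EXPERIENCES = ["experience A", "experience B", "experience C"]  # hypothetical

def exclusive_experience(visitor_id: str, layer_key: str = "exclusive-layer") -> str:
    """Put each visitor in exactly one of len(EXPERIENCES) equal traffic groups."""
    digest = hashlib.sha256(f"{layer_key}:{visitor_id}".encode()).digest()
    group = int.from_bytes(digest[:8], "big") % len(EXPERIENCES)
    return EXPERIENCES[group]

assignments = {f"visitor-{i}": exclusive_experience(f"visitor-{i}") for i in range(9_000)}
for exp in EXPERIENCES:
    members = [v for v, e in assignments.items() if e == exp]
    print(exp, len(members))
```

Because the group is derived from the visitor ID alone, a returning visitor always lands in the same single experience, and each experience receives roughly a third of the traffic.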

Convert allows for mutual exclusivity, and below we’ll show how to configure it so that visitors who view experience A won’t view experience B.

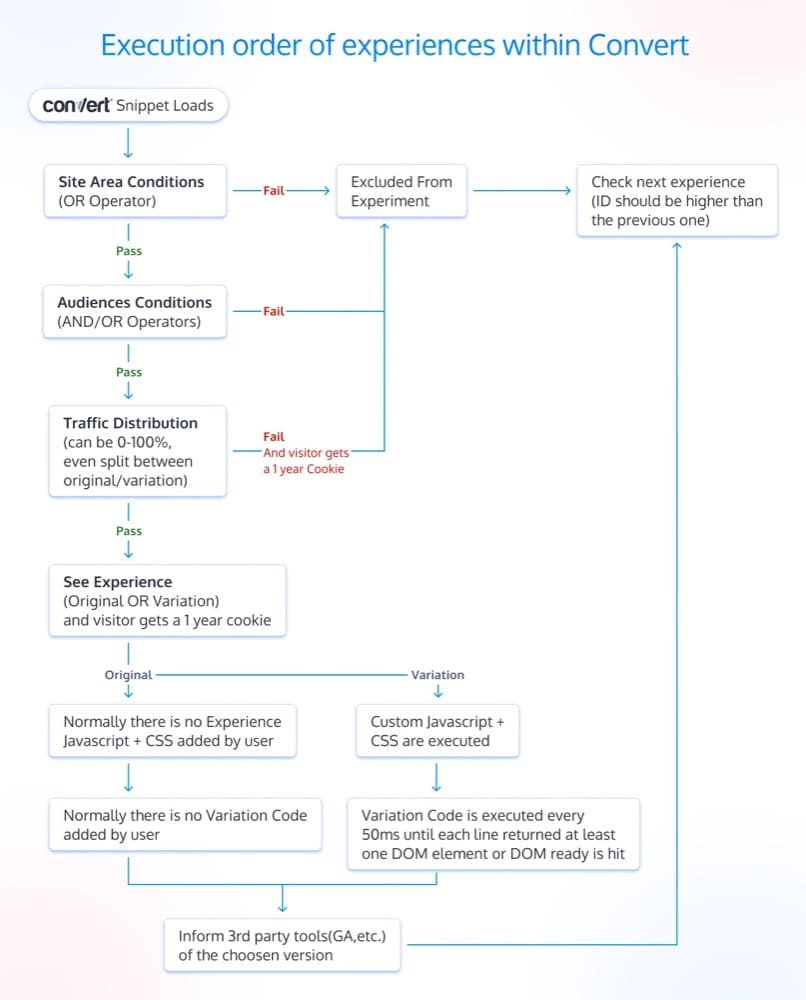

The order in which experiences are executed:

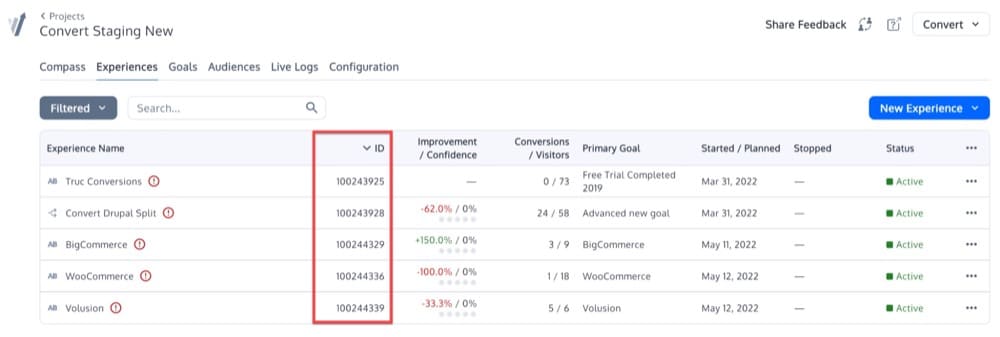

The first step in setting this up is to understand how Convert experiences are executed. Experience conditions get evaluated sequentially on a page, taking into account their experience ID.

The experience with the lowest ID is evaluated first; once its conditions have been evaluated, the experience with the next-lowest ID is evaluated, and so on. So in the screenshot above, the experience with ID 100243925 runs first and the rest follow.

Two Mutually Exclusive Experiences

These steps need to be followed if you have two experiences running simultaneously and want to make them mutually exclusive:

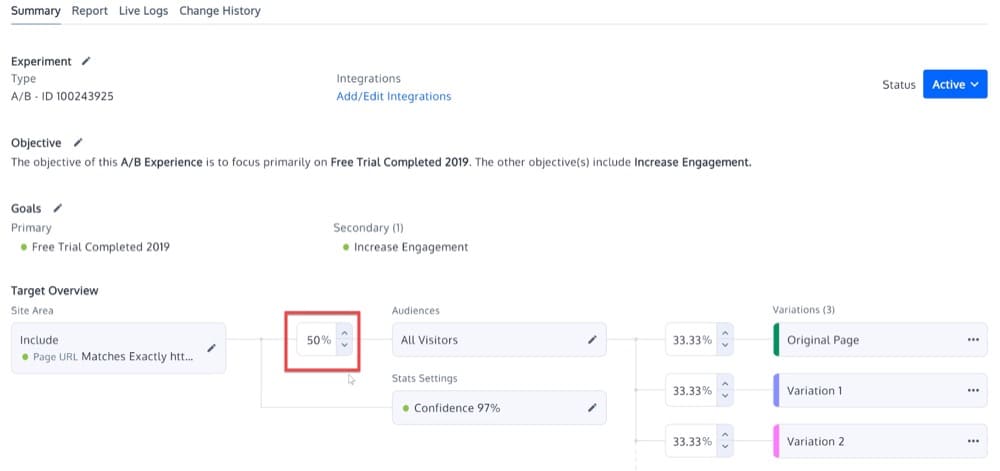

- Set Traffic Distribution to under 100% on the first experience

Set the experience with the lowest ID to use less than 100% of the traffic. You can do this in the Traffic Distribution section of the Experience Summary.

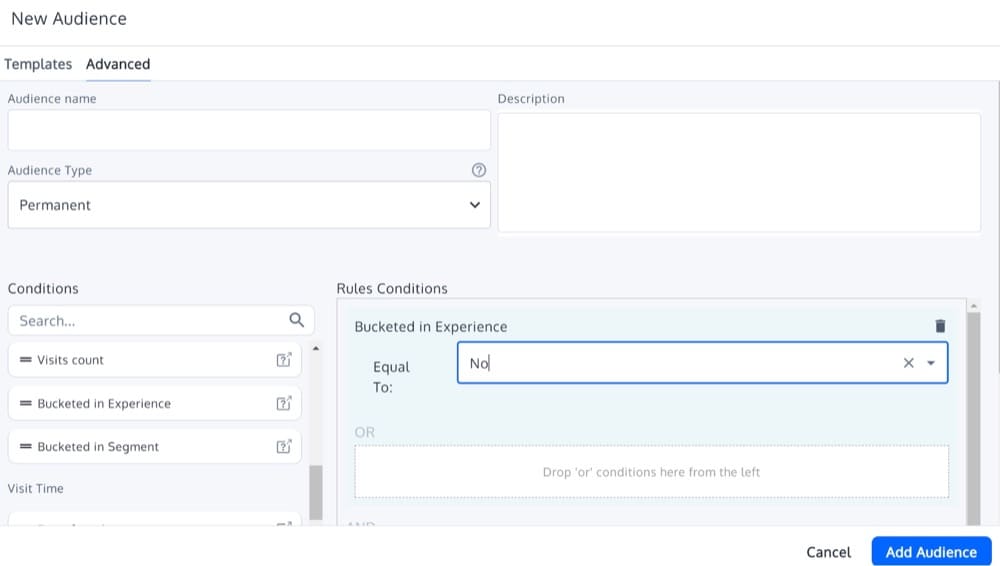

- Set Audience Condition of “Bucketed in Experience is No” on the second experience

Then, in the second experience, set an audience condition of “Bucketed in Experience is No”. You can find this condition when you add a new Audience (under Visitor Data). It means that a visitor is tested in the second experience only if they were not bucketed into the first one, which prevents the same visitor from being tested twice.
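Together, the two settings act as a gate: the first experience admits part of the traffic, and the second only considers visitors who were not bucketed into the first. The simulation below models that logic with a hypothetical 60% traffic distribution on the first experience, hypothetical IDs, and a simple hash in place of Convert’s real bucketing. It illustrates the idea, not Convert’s internals.

```python
import hashlib

# Hypothetical IDs and traffic distributions; the first experience has the lower ID.
FIRST_ID, FIRST_TRAFFIC = 1001, 0.60
SECOND_ID, SECOND_TRAFFIC = 1002, 1.00

def traffic_roll(visitor_id: str, experience_id: int) -> float:
    """Deterministic pseudo-random number in [0, 1) per visitor and experience."""
    digest = hashlib.sha256(f"{experience_id}:{visitor_id}".encode()).digest()
    return int.from_bytes(digest[:6], "big") / 2**48

def bucket(visitor_id: str) -> set[int]:
    """Return the IDs of the experiences this visitor is bucketed into."""
    bucketed = set()
    # The lowest ID is evaluated first; its traffic distribution is under 100%.
    if traffic_roll(visitor_id, FIRST_ID) < FIRST_TRAFFIC:
        bucketed.add(FIRST_ID)
    # Second experience: audience condition "Bucketed in Experience is No".
    if FIRST_ID not in bucketed and traffic_roll(visitor_id, SECOND_ID) < SECOND_TRAFFIC:
        bucketed.add(SECOND_ID)
    return bucketed

results = [bucket(f"visitor-{i}") for i in range(10_000)]
first = sum(FIRST_ID in b for b in results)
second = sum(SECOND_ID in b for b in results)
both = sum(len(b) == 2 for b in results)
print(f"first: {first}, second: {second}, both: {both}")
```

No visitor ends up in both experiences. The roughly 40% of traffic that the first experience leaves out flows to the second.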

Many Mutually Exclusive Experiences

If you have more than two experiences that need to be mutually exclusive, you can follow these steps:

- Set Traffic Distribution for all experiences to under 100%

Set all parallel experiences to use less than 100% of the traffic. You can do this in the Traffic Distribution section of the Experience Summary.

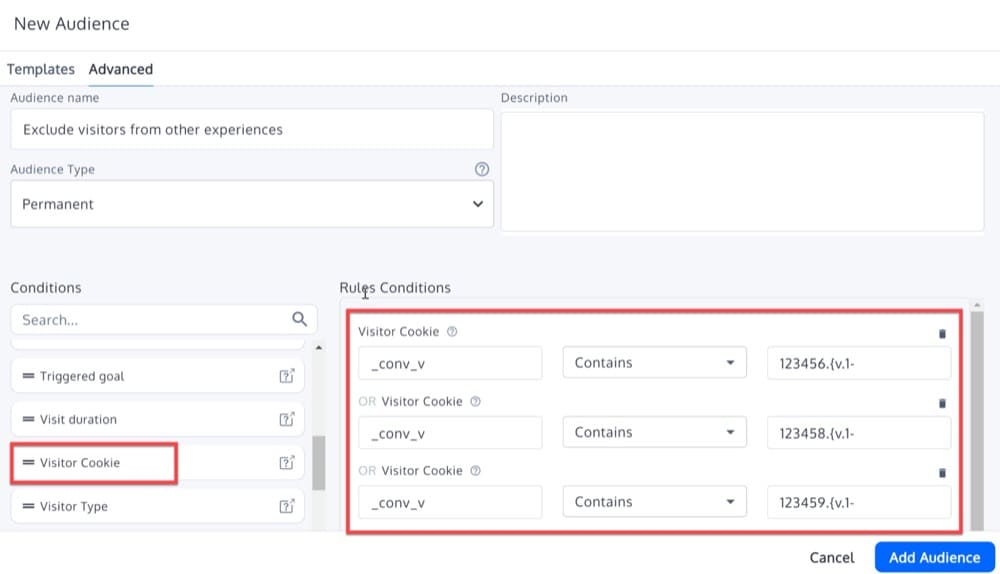

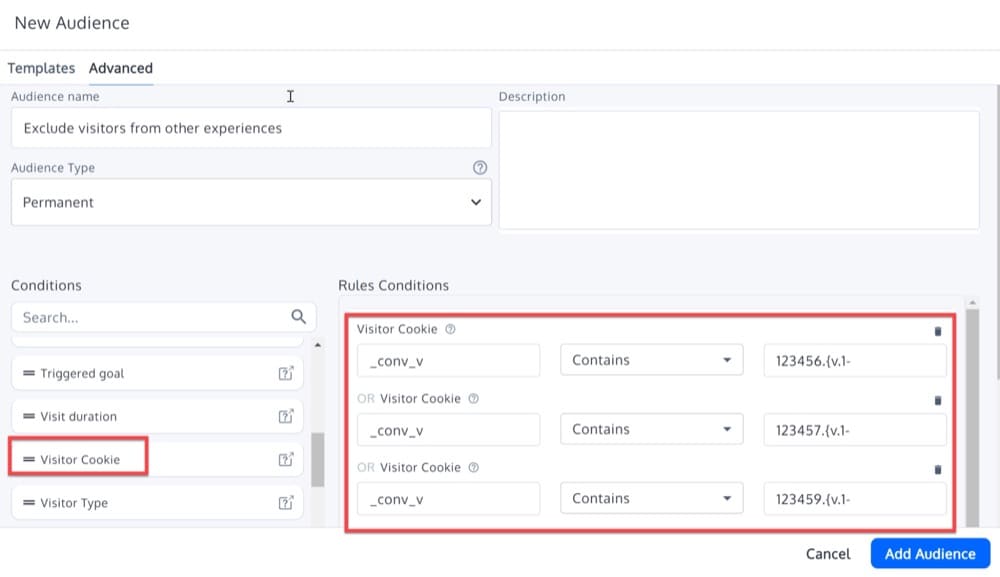

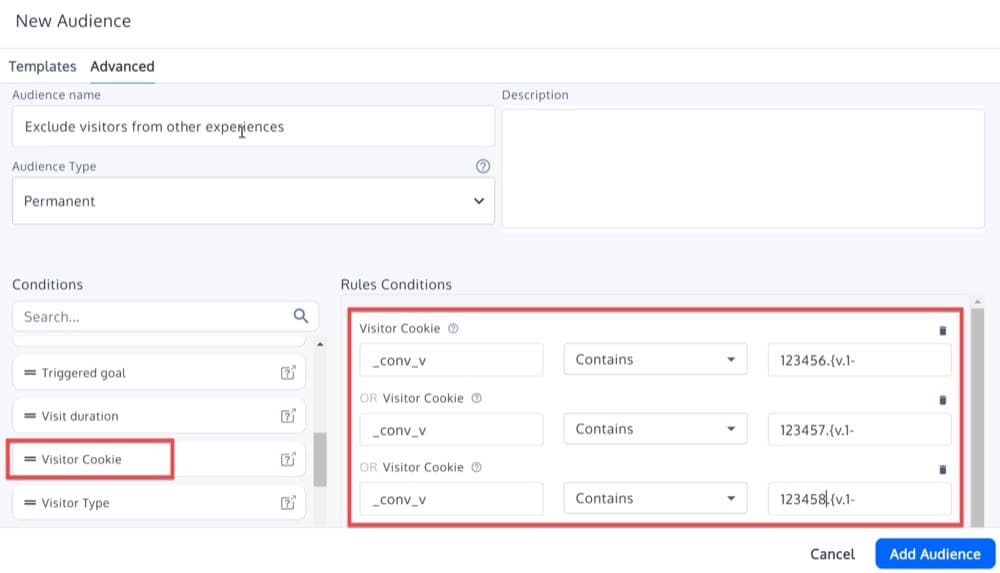

- Set an Advanced Audience based on visitor cookie

Then, in all the experiences except the experience with the lowest ID, use an Advanced Audience based on visitor cookies to exclude visitors that have been included in the other parallel experiences.

For example, let’s assume we have these 4 experiences:

- Experience A with ID 123456, traffic distribution 80%

- Experience B with ID 123457, traffic distribution 50%

- Experience C with ID 123458, traffic distribution 30%

- Experience D with ID 123459, traffic distribution 75%
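It helps to work out how much traffic each of these experiences actually receives. The sketch assumes every visitor qualifies for all four experiences (same pages and audiences) and that each later experience admits only visitors not bucketed into any lower-ID experience. Under those assumptions, each traffic distribution applies to whatever traffic is left over.

```python
from fractions import Fraction

# (experience, ID, traffic distribution) from the example above.
experiences = [
    ("A", 123456, Fraction(80, 100)),
    ("B", 123457, Fraction(50, 100)),
    ("C", 123458, Fraction(30, 100)),
    ("D", 123459, Fraction(75, 100)),
]

def effective_shares(experiences):
    """Share of all traffic bucketed into each experience, evaluated by ascending ID."""
    remaining = Fraction(1)          # traffic not yet bucketed anywhere
    shares = {}
    for name, _exp_id, distribution in sorted(experiences, key=lambda e: e[1]):
        shares[name] = remaining * distribution
        remaining -= shares[name]
    return shares, remaining

shares, unbucketed = effective_shares(experiences)
for name, share in shares.items():
    print(f"Experience {name}: {float(share):.2%}")
print(f"In no experience: {float(unbucketed):.2%}")
```

Under these assumptions, experiences C and D receive only a small slice of traffic, so if they need sample sizes comparable to A, lowering the traffic distribution of the earlier experiences is the lever to pull.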

Experience B should have this advanced audience:

Experience C should have this advanced audience:

And finally, Experience D should have this advanced audience:

As you can see above, the cookie value is formatted as follows:

xxxxxx.{v.1-

This format is needed because, for an experience configured with less than 100% traffic, a cookie is still written when a visitor meets the Site Area and Audience conditions but, due to the traffic distribution, is not included in the experience.

The Convert cookie _conv_v will look similar to this:

exp:{12345678.{v.1-g.{}}}

Note that in the above format there is no variation value – only v.1 – because the visitor was not included in the experience. However, we keep track of this with cookies so that the next time the visitor visits the page they will again be excluded from the same experience.
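If you inspect the _conv_v cookie while debugging, a small helper can tell these recorded states apart. This is a hypothetical sketch that relies only on the sample shown above: it assumes an experience appears as `<ID>.{v.<value>-` and that `v.1` means “recorded but not included”. Treating any other value as a variation is an assumption, and the real cookie may contain more fields than this parser handles.

```python
import re

def experience_status(cookie_value: str, experience_id: int) -> str:
    """Classify a visitor's state for one experience from a _conv_v-style string.

    Hypothetical parser based on the sample format above; not an official API.
    """
    prefix = re.escape(f"{experience_id}.{{v.")
    # (?<!\d) stops ID 12345678 from matching inside a longer ID like 112345678.
    match = re.search(r"(?<!\d)" + prefix + r"(\d+)-", cookie_value)
    if match is None:
        return "no record"                   # nothing stored for this experience
    if match.group(1) == "1":
        return "recorded, not included"      # e.g. excluded by traffic distribution
    return f"bucketed (value {match.group(1)})"

sample = "exp:{12345678.{v.1-g.{}}}"
print(experience_status(sample, 12345678))
print(experience_status(sample, 99999999))
```

This mirrors what the advanced audience checks: a visitor who shows up as “recorded, not included” for an earlier experience can still be admitted to a later one.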

Conclusion

Having multiple experiences running concurrently introduces some complexities – you’re not always sure which tests increase conversions or whether there are hidden interactions between them. However, this is not a major problem as there are strategies to mitigate these issues.

We discussed 5 strategies for dealing with the problems caused by multiple tests running at the same time:

- Running experiences simultaneously when they have no overlap with each other

- Running experiences sequentially when you cannot avoid the experience overlap

- Running A/B/N experiences

- Running MVT tests

- Running mutually exclusive experiences

We’ve also shown how Convert supports all of the testing strategies above, making it a very versatile tool.

It is important to take into account all these complexities when performing A/B testing, so you can select the most appropriate strategy in every case. We will be more than happy to assist you if you still have any questions.