What Is a Reasoning Engine?

Go even deeper into the world of LLMs, so you can make the most of your conversational copilot.

Shipra Gupta

Imagine if AI could automate routine business tasks like drafting emails, generating campaign briefs, building web pages, researching competitors, analyzing data and summarizing calls. Automating such repetitive tasks can free up an immense amount of valuable human time and effort for more complex and creative activities like business strategy and relationship building.

Automation of such routine business tasks requires simulating human intelligence by making AI function as a reasoning engine. It’s generative AI at another level. In addition to communicating in natural language, AI will also help with problem-solving and decision-making. It will learn from the information provided, evaluate pros and cons, predict outcomes, and make logical decisions. Given recent technological advancements, we are on the cusp of such an AI capability, and it has many people in the scientific and business communities excited.

What is a reasoning engine?

A reasoning engine is an AI system that mimics human-like decision-making and problem-solving capabilities based on certain rules, data, and logic. Reasoning engines tend to emulate three types of human reasoning, or inference mechanisms:

- Deductive reasoning – makes an inference based on universal and generally accepted facts. For example: “All birds lay eggs. A pigeon is a bird. Therefore, pigeons lay eggs.”

- Inductive reasoning – derives a conclusion from specific instances or samples. This might look like: “Every dog I meet is friendly. Therefore, all dogs are friendly!”

- Abductive reasoning – makes a probable conclusion from incomplete (and often ambiguous) information, such as: “There are torn papers all over the floor and our dog was alone in the apartment. Therefore, the dog must have torn the papers.”
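To make the deductive case concrete, here is a minimal sketch of how a classic rule-based engine could derive the pigeon conclusion. It illustrates the inference pattern only, not how an LLM reasons internally, and the `deduce` helper and the fact/rule format are assumptions made up for this example.

```python
def deduce(facts, rules):
    """Apply 'every <premise> <conclusion>' rules until no new facts appear.

    facts: set of (subject, property) pairs, e.g. ("pigeon", "bird")
    rules: list of (premise, conclusion) pairs, e.g. ("bird", "lays eggs")
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for subject, prop in list(known):
            for premise, conclusion in rules:
                if prop == premise and (subject, conclusion) not in known:
                    known.add((subject, conclusion))
                    changed = True
    return known


facts = {("pigeon", "bird")}        # "A pigeon is a bird."
rules = [("bird", "lays eggs")]     # "All birds lay eggs."

conclusions = deduce(facts, rules)
print(("pigeon", "lays eggs") in conclusions)  # True
```

Inductive and abductive reasoning do not reduce to a rule lookup like this, because their conclusions are only probable rather than guaranteed.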

By now, most people know that large language models (LLMs) are machine learning models that can generate useful new content from the data they are trained on. Beyond that, today’s LLMs also exhibit the ability to understand context, draw logical inferences from data, and connect various pieces of information to solve a problem. These characteristics enable an LLM to act as a reasoning engine.

So how does an LLM solve a common business math problem by evaluating information, generating a plan, and applying a known set of rules?

Let’s say a coffee shop owner wants to know how many coffees she needs to sell per month to break even. She charges $3.95 per cup, her monthly fixed costs are $2,500 and her variable costs per unit are $1.40.

The LLM applies a known set of math rules to systematically get the answer:

Step 1

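Identify the relevant values: a selling price of $3.95 per cup, a variable cost of $1.40 per cup, and monthly fixed costs of $2,500.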

Step 2

Calculate the contribution margin per coffee. Contribution margin is the selling price minus the variable cost.

= $3.95 – $1.40 = $2.55

Step 3

Apply the break-even formula. The break-even point is the fixed cost divided by the contribution margin.

= $2,500 / $2.55 ≈ 980.39

Step 4

Round up to the nearest whole number.

Break-even point = 981 coffees
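The same four steps can be checked with a few lines of Python. This is a small sketch rather than anything an LLM runs internally; the function name `break_even_units` is an assumption for illustration, and `Decimal` is used so that currency values are not distorted by floating-point rounding.

```python
from decimal import Decimal, ROUND_CEILING


def break_even_units(price, variable_cost, fixed_costs):
    """Return the number of units needed to cover fixed costs."""
    price = Decimal(str(price))
    variable_cost = Decimal(str(variable_cost))
    fixed_costs = Decimal(str(fixed_costs))

    # Step 2: contribution margin = selling price - variable cost
    margin = price - variable_cost
    if margin <= 0:
        raise ValueError("price must exceed variable cost to break even")

    # Step 3: break-even point = fixed costs / contribution margin
    exact = fixed_costs / margin

    # Step 4: round up, since a fraction of a coffee cannot be sold
    return int(exact.to_integral_value(rounding=ROUND_CEILING))


units = break_even_units("3.95", "1.40", "2500")
print(units)  # 981
```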

How to make LLMs function as effective reasoning engines

The popularity of large language models skyrocketed in the fall of 2022, but scientists had already been deep in experimentation with these models through various prompts. “Prompting,” or prompt engineering, is now a fast-emerging domain in which a carefully crafted set of input instructions (a prompt) is sent to the LLM to generate the desired results. When we use prompts to generate a logical plan of steps for accomplishing a goal, we also refer to them as “reasoning strategies.” Let’s explore some of the popular reasoning strategies below:

- Chain-of-Thought (CoT): This is one of the most popular reasoning strategies. This approach mimics human-style decision-making by instructing an LLM to break down a complex problem into a sequence of steps. This strategy is also referred to as a “sequential planner.” Chain-of-Thought reasoning can handle math word problems, commonsense reasoning, and other tasks that a human can solve with language. One benefit of CoT is that it allows engineers to peek into the process and, if things go wrong, identify what went wrong and fix it.

- Reasoning and Acting (ReAct): This strategy taps into real-world information for reasoning in addition to the data the LLM has been trained on. ReAct-based reasoning is touted as more akin to human-like task solving, which involves interactive decision-making and verbal reasoning, leading to better error handling and lower hallucination rates. It synergizes reasoning and acting, which increases the interpretability and trustworthiness of responses. This strategy is also referred to as a “stepwise planner” because it approaches problem-solving in a step-by-step manner and also seeks user feedback at every step.

- Tree of Thoughts (ToT): This variation of the Chain-of-Thought approach generates multiple thoughts at each intermediate step. Instead of picking just one reasoning path, it explores and evaluates the current status of the environment at each step, actively looking ahead or backtracking to make more deliberate decisions. This strategy has been shown to significantly outperform CoT on complex tasks like math games, creative writing exercises, and mini-crossword puzzles. ToT reasoning is deemed to be even closer to a human decision-making paradigm that explores multiple options, weighs pros and cons, and then picks the best one.

- Reasoning via Planning (RAP): This strategy uses LLMs as both the reasoning engine and the world model to predict the state of the environment and simulate the long-term impact of actions. It integrates multiple concepts, like exploring alternative reasoning paths, anticipating future states and rewards, and iteratively refining existing reasoning steps to achieve better reasoning performance. RAP-based reasoning reports superior performance over various baselines for tasks that involve planning, math reasoning, and logical inference.

These are just a few of the most promising strategies today. Applying these strategies to a real-life AI application is an iterative process that entails tweaking and combining various strategies for the best performance.
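To give a feel for the difference between a single reasoning path and a tree of them, here is a rough sketch. `build_cot_prompt` shows one possible wording of a Chain-of-Thought instruction, and `tree_of_thoughts` is a simplified beam search in the spirit of ToT. Both names, the prompt wording, and the toy number puzzle are assumptions for illustration; in a real system the `propose` and `score` functions would be LLM calls rather than arithmetic.

```python
from typing import Callable, List, Tuple

Path = Tuple[int, ...]


def build_cot_prompt(question: str) -> str:
    """Wrap a question in a simple chain-of-thought instruction."""
    return (
        f"Question: {question}\n"
        "Work through the problem step by step, numbering each step, "
        "then give the final answer on its own line."
    )


def tree_of_thoughts(
    start: int,
    propose: Callable[[int], List[int]],
    score: Callable[[int], float],
    is_goal: Callable[[int], bool],
    beam_width: int = 2,
    max_depth: int = 6,
) -> Path:
    """Expand several candidate 'thoughts' per step and keep only the best few."""
    frontier: List[Path] = [(start,)]
    for _ in range(max_depth):
        candidates: List[Path] = []
        for path in frontier:
            for nxt in propose(path[-1]):
                new_path = path + (nxt,)
                if is_goal(nxt):
                    return new_path
                candidates.append(new_path)
        if not candidates:
            break
        # Evaluate every candidate and prune to the most promising ones.
        candidates.sort(key=lambda p: score(p[-1]), reverse=True)
        frontier = candidates[:beam_width]
    return ()  # no path found within max_depth


# Toy puzzle: reach 20 from 1 using only "+3" and "*2".
TARGET = 20
path = tree_of_thoughts(
    start=1,
    propose=lambda n: [n + 3, n * 2],
    score=lambda n: -abs(TARGET - n),
    is_goal=lambda n: n == TARGET,
)
print(path)
print(build_cot_prompt("How many coffees must the shop sell to break even?"))
```

In this toy puzzle, keeping two candidates per step finds a valid path, while the same search with `beam_width=1` (always committing to the single best-looking step) runs out of depth without reaching the target. That is the intuition behind exploring multiple options before deciding.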

How can real-life applications use reasoning engines?

It is quite exciting to have LLMs function as reasoning engines, but how do you make them useful in the real world? To draw an analogy with humans, if LLMs are like the brain with reasoning, planning, and decision-making abilities, we still need our hands and legs in order to take action. Cue the “AI agent,” an AI system that has both reasoning and action-taking abilities. Some of the prevalent terms for action-taking are “tools,” “plug-ins,” and “actions.”

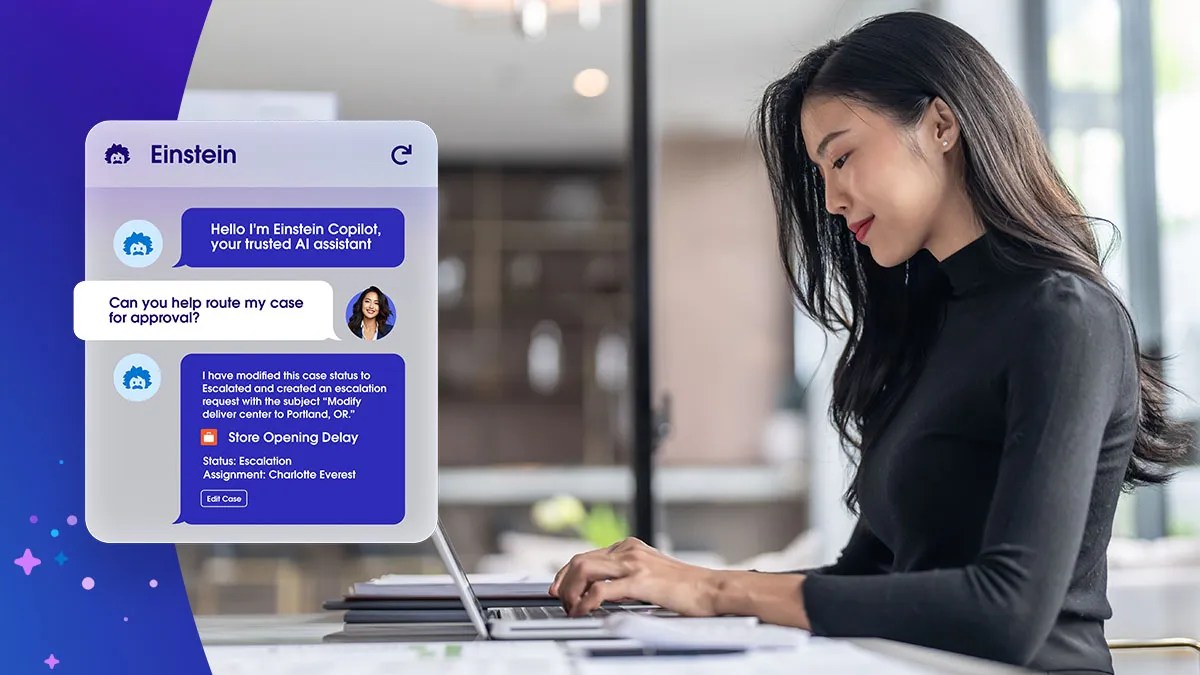

There are two kinds of AI agents: fully autonomous and semi-autonomous. Fully autonomous agents can make decisions and act on them without any human intervention. These kinds of agents are currently experimental. Semi-autonomous agents involve a “human in the loop” to trigger requests. We are starting to see the adoption of semi-autonomous agents, primarily in AI applications like conversational chatbots, including Einstein Copilot, ChatGPT, and Duet AI.

An AI agent has four key components:

- Goal – the primary goal or task of the agent.

- Environment – the contextual information, such as the goal, initial user input, history of previous activities or conversation, grounding data for relevancy, user feedback, and the data that the LLM has been trained on.

- Reasoning – the inbuilt ability of the LLM to make observations, plan next actions, and recalibrate to optimize toward the intended goal.

- Action – typically external tools that enable an agent to achieve the goal. Some common examples of actions are information retrieval, search, code generation, code interpretation, and dialog generation.

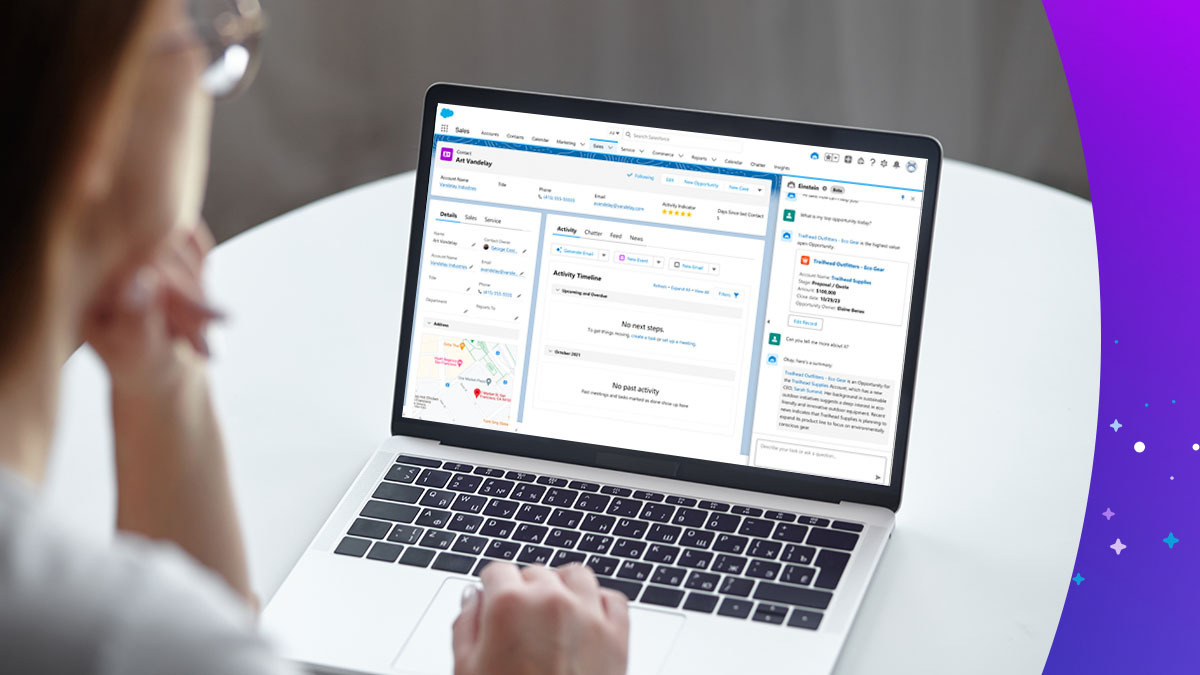
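One way to picture how the four components fit together is the short sketch below. It is an illustrative assumption, not any product’s actual design: the `Agent` class, the `toy_reason` function standing in for the LLM, and the two fake tools are all made up for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# A reasoning step is either (action name, argument) or None when the goal is met.
Step = Optional[Tuple[str, str]]


@dataclass
class Agent:
    goal: str                                    # Goal
    reason: Callable[[str, List[str]], Step]     # Reasoning (stand-in for the LLM)
    actions: Dict[str, Callable[[str], str]]     # Action (external tools)
    environment: List[str] = field(default_factory=list)  # Environment (history)

    def run(self, max_steps: int = 5) -> List[str]:
        for _ in range(max_steps):
            step = self.reason(self.goal, self.environment)
            if step is None:
                break
            name, arg = step
            if name not in self.actions:
                self.environment.append(f"error: unknown action {name!r}")
                break
            # Record the observation so the next reasoning step can use it.
            self.environment.append(f"{name}: {self.actions[name](arg)}")
        return self.environment


def toy_reason(goal: str, history: List[str]) -> Step:
    """Hard-coded plan: search first, then summarize what was found."""
    if not history:
        return ("search", goal)
    if len(history) == 1:
        return ("summarize", history[0])
    return None


agent = Agent(
    goal="competitor pricing",
    reason=toy_reason,
    actions={
        "search": lambda query: f"3 documents about {query}",
        "summarize": lambda text: f"summary of [{text}]",
    },
)
log = agent.run()
for entry in log:
    print(entry)
```

The loop alternates between reasoning and acting, and each observation is written back into the environment so the next decision can take it into account.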

How does Einstein Copilot use LLMs as a reasoning engine?

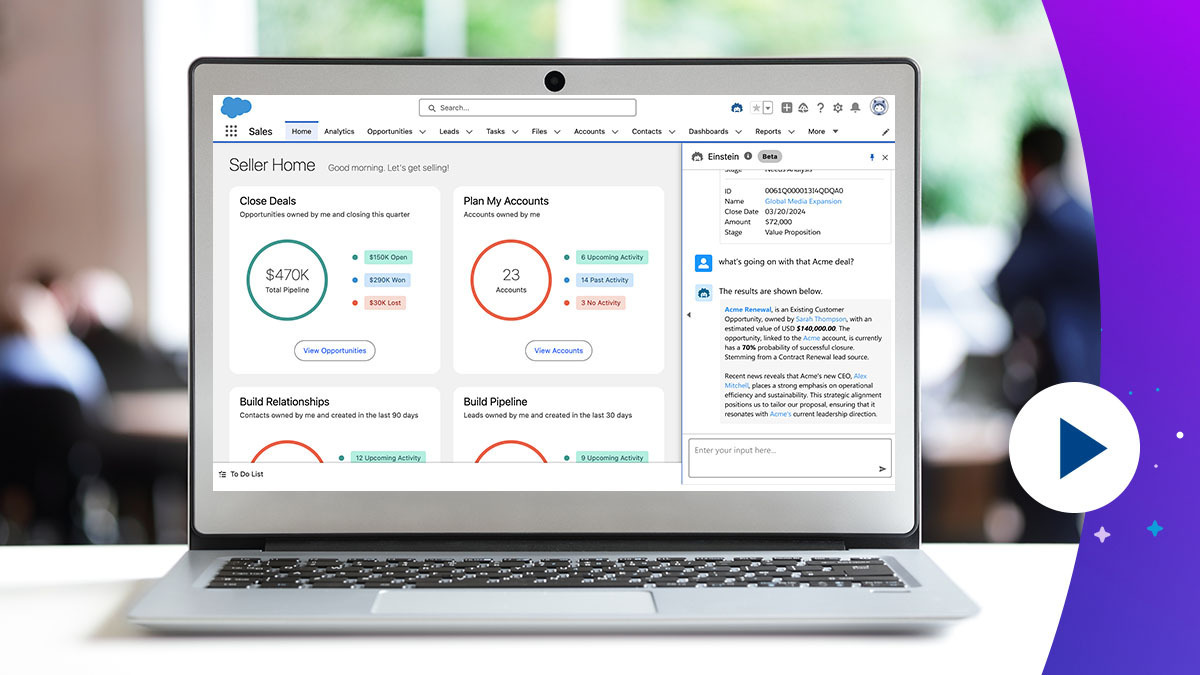

Einstein Copilot is Salesforce’s advanced AI-powered conversational assistant, which interacts with a company’s employees and customers in natural language. Employees can use it to accomplish a variety of tasks in the flow of work, helping to increase productivity at scale. And consumers can use it to chat with brands and get questions answered immediately, leading to higher satisfaction and loyalty. Einstein Copilot uses LLMs for language skills like comprehension and content generation and also as a reasoning engine to plan for complex tasks, thereby reducing the cognitive load on users.

Here’s how it works:

- The user types the goal they want to accomplish, for example: “Build a webpage.”

- Einstein Copilot uses a curated prompt to send the user input to a secure LLM to infer the user’s intent.

- Based on the intent, Einstein Copilot sends another curated prompt to instruct the LLM to generate a plan to fulfill that intent.

- The generated plan is a set of actions chained together in a logical sequence. In order to ensure that Einstein Copilot is acting in a trusted manner, the LLM is instructed to generate plans strictly with actions that are made available to it.

- Once the LLM returns a plan, Einstein Copilot executes the actions in the prescribed sequence to generate a desired outcome and relays that to the user.
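The flow above can be sketched in a few lines of Python. To be clear, this is not Einstein Copilot’s implementation or API: `run_copilot`, the prompt wording, the comma-separated plan format, and the `fake_llm` stub are all hypothetical and exist only to show the shape of the loop, including the check that a plan uses only the actions made available.

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # prompt in, text out


def run_copilot(user_input: str, llm: LLM, actions: Dict[str, Callable[[str], str]]) -> str:
    # 1. Infer the user's intent with a curated prompt.
    intent = llm(f"Classify the intent of this request in a few words: {user_input}").strip()

    # 2. Ask for a plan restricted to the available actions.
    plan_text = llm(
        f"Intent: {intent}\n"
        f"Allowed actions: {', '.join(sorted(actions))}\n"
        "Return a comma-separated list of allowed actions, in execution order."
    )
    plan: List[str] = [step.strip() for step in plan_text.split(",") if step.strip()]

    # 3. Reject any plan that reaches outside the allowed actions.
    unknown = [step for step in plan if step not in actions]
    if unknown:
        raise ValueError(f"plan uses unavailable actions: {unknown}")

    # 4. Execute the actions in sequence, feeding each result to the next.
    result = user_input
    for step in plan:
        result = actions[step](result)
    return result


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real LLM call."""
    if prompt.startswith("Classify"):
        return "build_webpage"
    return "draft_copy, render_html"


available_actions = {
    "draft_copy": lambda text: f"Copy for: {text}",
    "render_html": lambda text: f"<html><body>{text}</body></html>",
}

page = run_copilot("Build a webpage.", fake_llm, available_actions)
print(page)  # <html><body>Copy for: Build a webpage.</body></html>
```

Validating the plan before executing anything is the design choice that matters here: the LLM proposes, but only pre-approved actions ever run.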

Visually, this looks like…

How can your business benefit from Einstein Copilot?

Einstein Copilot gives companies the ability to tap into LLMs as reasoning engines. With this tool, companies can use AI to accomplish a number of tasks that weren’t realistic just a few months ago.

- If a sales team is seeing a thin pipeline, Einstein Copilot can scan databases to find high-quality leads worth engaging.

- Copilot can scan potential deals to find the ones that are at risk and, if asked, summarize the records for managers.

- Copilot can help service agents resolve an over-billing issue for a customer, gathering the right information for troubleshooting.

- Copilot can analyze the current customer sentiment for a potential deal and recommend the actions needed to close the deal over the next three months.

In these use cases and many others like them, Einstein Copilot is essentially acting as a semi-autonomous agent, using LLMs as reasoning engines and taking actions to fulfill tasks when prompted by users. This is just the beginning; the next frontier is making Einstein Copilot fully autonomous so that it is not only assistive but also proactive and omnipresent. AI holds a thrilling future, but even more exciting are the efficiency gains sure to follow worldwide.
