Make Sure Your A/B Tests Are Foolproof with Quality Assurance: The Complete Guide to QA-ing Your A/B Tests

A/B tests that don’t function properly don’t convert.

An A/B test can burn through a significant amount of time, money, and resources for optimizers. And, of course, no one wants their hard work to go to waste. This is why you need to perform quality assurance (QA) on websites, landing pages, personalizations, A/B tests, email campaigns, and so on, to ensure everything works as it should.

The best way to build A/B tests that are simple, intuitive, error-free, and efficient is to adopt a proper QA process. That way, visitors get a reliable, interactive experience that works the way you intended.

QA is the key to disaster prevention. Its ability to uncover defects and vulnerabilities before they become critical helps optimizers avoid high costs as testing progresses.

While it’s common knowledge that quality assurance is important, many optimizers don’t take the time to QA their tests properly. As a result, these tests often fail. If optimizers did QA properly, fewer websites would “not work.”

What Is Quality Assurance?

Let’s get some quick definitions out of the way first.

What Is Quality?

Quality means “suited for use or purpose.” In A/B testing, quality is all about meeting customers’ wants and expectations, whether you’re looking at functionality, design, reliability, durability, or price.

What Is Assurance?

Assurance is simply a positive declaration about an A/B test that instills confidence. It’s the certainty that an A/B test will work properly and according to expectations or requirements.

Quality Assurance in A/B Testing

Simply put, quality assurance is a technique to ascertain an A/B test’s quality. It brings optimizers and developers together to ensure everything looks and works right on all relevant devices and browsers.

In other words, a website (or landing page, variation, email campaign, and so on) needs to be of high quality to avoid exposing visitors to friction and other pain points.

User Testing vs. Quality Assurance

User testing looks at how a user actually experiences a website. Quality assurance focuses on whether the website is built and works as designed.

User testing:

- Examines how real people use a website and how this differs from what the developers intended

- Spots potential visitor misunderstandings and friction points in a real-world usage environment

Quality assurance:

- Surfaces errors and broken elements on the website

- Improves site performance

So, they’re different, with QA being a much broader term.

Why Is QA Important in A/B Testing?

Sure, a few bugs here and there are irritating.

But, at the end of the day, do they impact the bottom line? Is QA worth investing more resources into? What difference does it make if things aren’t perfect?

Although we’ve overcome considerable hurdles in quality assurance, many organizations continue to undervalue it.

Let’s find out the biggest risks affecting QA and why it plays such a big role in A/B testing.

Optimizers and Developers Are Too Close to A/B Testing

Optimizers and developers usually work together to set up A/B tests. They’re so close that it’s easy to overlook minor but crucial aspects when they’re in charge of quality assurance.

QA testers, however, make sure that attention is paid to quality. They use an application in non-obvious, “unexpected” ways that its creators never intended. QA testers refuse to accept that “bugs have been fixed” without proof. They’re not afraid to try new things and fail. If they don’t find a bug the first time, they get more creative with their testing until the application meets the expected standards.

A second pair of eyes on A/B testing and its design can uncover unexpected flaws.

Emulators Are Not the Real Deal

End users don’t browse on an emulator. They use an actual browser on an actual device. It’s entirely possible to miss browser-specific issues if you don’t investigate them on real devices and browsers.

We’ve seen many support cases in which users were confident that their A/B experiment worked fine on BrowserStack, which they used to preview how the test looks on different browsers. Once the test launched for real visitors, however, they contacted our support agents to report a malfunction.

Real support case:

Having an issue with a conversion on my project.

Click_Cart_Checkout_1 won’t seem to fire on iPhone XR/Safari. I’ve checked dev tools console on both (chrome://inspect and linking an iPhone to a macbook), and I can see the code executing for that conversion, but it isn’t tracking in the reporting.

The conversion is tracked when using XR to emulate an iPhone in devtools but not in real world testing.

We have tested on two separate iPhone’s. Our QA tester and mine.

You may not be able to test if your metrics configuration is correct unless you perform manual QA across browsers. After a few weeks, you might even check your results and discover that your primary action isn’t triggering correctly or at all on some browsers!

So make sure you QA your tests in different environments, not just in emulators.

Interactions

You can also miss bugs linked to page or variation interactions if you don’t examine the variations manually. These bugs range from simple problems, such as an accordion that won’t open or a button that doesn’t respond, to significant ones that affect the entire funnel.

Real support case:

On the variation page, we have problems with the CheckOut button and sometimes facing issues with the PayPal button, though on the original page all working well.

When I click the checkout button, it redirects me here: cart.php/checkout.php but should be /checkout.php

We also have problems with the Logo; sometimes it is showing, sometimes not.

A/B testing isn’t only about adding new elements to page variations. It’s also about how those elements interact with each other and with the rest of the page, and whether the overall site still works.

Breakpoints and Changing the Device Display Mode

If you rely on emulators, you might overlook issues that appear when the device switches from portrait to landscape mode or vice versa. QA the variations on real mobile or tablet devices. That way you can quickly spot-check that each variation displays correctly in both modes and while the user switches between them.

Real support case:

We were running a multivariate test on our project, and the client noticed that the images were showing incorrectly on mobile landscape mode. Can I get your help figuring out why?

Tests From a Human Perspective

Only manual QA performed by a human can tell when something seems “odd.” A QA engineer who interacts with a website the way a user would can find usability issues and user interface (UI) glitches. Automated test scripts don’t pick up these visual flaws.

What Happens If You Don’t QA A/B Tests?

Now that it’s clear that QA can mean the difference between business success or failure, let’s see what happens if you ignore it (hint: it’s not pretty).

Delays in Launching A/B Tests

To ensure an A/B test’s success, it’s crucial to set aside enough time for quality assurance beforehand. This includes ensuring all web or app elements work correctly and meet business and customer needs. QA helps identify and fix issues before you start testing to avoid launch delays.

Errors in Report Results

Without QA, variation errors crop up and can cause statistical errors in the report results.

A false positive (also known as a Type I error) occurs when the test results show a significant difference between the original and the variation but no real difference exists. The opposite mistake is a false negative (a Type II error). This occurs when the results show no significant difference between the variation and the original even though a real difference exists.
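The following minimal Python sketch makes Type I errors concrete. It illustrates a textbook method and is not Convert’s statistics engine. The sketch simulates many A/A tests, where the original and the “variation” are identical. It then counts how often a pooled two-proportion z-test still reports a significant difference. The conversion rate, visitor counts, number of runs, and seed are arbitrary assumptions. At a 5% significance level, roughly 5% of runs should come out as false positives purely by chance.

```python
import math
import random
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups converted at 0% (or 100%): no evidence of a difference.
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def aa_false_positive_rate(rate: float = 0.05, visitors: int = 500,
                           runs: int = 1000, alpha: float = 0.05,
                           seed: int = 42) -> float:
    """Simulate A/A tests (both arms share the same true conversion rate) and
    return the share of runs that the z-test wrongly flags as significant."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    false_positives = 0
    for _ in range(runs):
        conv_a = sum(rng.random() < rate for _ in range(visitors))
        conv_b = sum(rng.random() < rate for _ in range(visitors))
        if two_proportion_p_value(conv_a, visitors, conv_b, visitors) < alpha:
            false_positives += 1  # a "winner" where none exists: a Type I error
    return false_positives / runs
```

Calling aa_false_positive_rate() should return a value close to 0.05. The exact figure depends on the seed. This baseline error rate already exists before any bugs enter the picture. A broken variation or a misfiring goal distorts the results on top of it.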

Without proper QA, you may not be able to determine your A/B test results accurately. This can lead to incorrect conclusions about your business’ performance and potential optimizations. What other statistics does a lack of QA affect?

Poor Compatibility

Your visitors access your services from various locations and browsers.

Think about compatibility with different devices and languages, and test it where possible. It’s your responsibility to make sure that your application works across different browsers and their versions.

Customer Frustration and Reputation Loss

Did you know that dissatisfied consumers are more likely to leave a public review than satisfied customers?

Online and offline complaints, as well as product abandonment, are bad news for your business. Because of the negative information, other potential buyers are hesitant to purchase the product. A few disgruntled (and outspoken) customers can mean lower confidence in your brand. If consumers post a negative review online, their dissatisfaction may affect future purchases.

A Lack of Trust in the A/B Testing Tool

The only reason brands invest in an A/B testing solution in the first place is to make informed decisions and provide their visitors with the best possible online experience. You can’t count on your optimization efforts to translate into meaningful benefits if you can’t trust your A/B testing solution.

By ensuring that all A/B tests are quality-assured, you’ll know that data is appropriately collected and your optimization efforts deliver real benefits to your visitors.

Technical issues in A/B testing are critical because they pose significant risks for brands, including data loss, distorted reporting, and biased decision-making. They also have serious financial consequences. Brands need to be able to fully trust their A/B testing solution. It’s a decision tool that directly influences conversion rates, sales, and revenue.

Business Suffers From Lost Sales

You only get one chance to make a first impression. If you don’t take the time to QA, you may be leaving money on the table.

Seemingly small bugs can cause your customers to abandon your store. Examples include a checkout step that won’t let customers proceed or a CTA that doesn’t show up. You need to test for all of this to protect the customer experience.

The key to customer success and customer happiness is delivering a high-quality product. Satisfied customers return and may even refer others.

4 Best Practices to QA A/B Tests

If you’ve read to this point, it means you understand the value of quality assurance and want to learn more about it. But what parts of an A/B test can you QA? And what are the best practices to follow?

Here are a few ways to get you started.

1. Develop a QA Strategy

Before you QA your A/B tests, create a strategy or establish a structured process. It should have a clear objective and specific quality standards to measure the QA against.

Also, discuss the pain points with your development and optimization teams. Bring everyone involved on the same page by considering different perspectives and setting shared goals and standards.

Your QA strategy should also align with your conversion goals. If the time and resources you spend on quality assurance aren’t ultimately contributing to conversions, you may need to rethink the whole process.

A small list that wants exactly what you’re offering is better than a bigger list that isn’t committed.

S. Ramsay Leimenstoll, Investment Advisor and Financial Planner at Bell Investment

What do you typically need to build an effective strategy?

- Laying the groundwork and assigning roles

- Knowing exactly what to QA

- Forecasting problems that may arise and ways to fix them

- Listing variations

- Identifying who the QA is intended for (target user group)

- Setting standards for QA

2. Identify What to QA in an A/B Test

QA-ing an A/B test can be tedious and complex if you don’t know what to check and how to prepare. Here are some of the most critical aspects to consider.

On-page Elements

On-page elements are the meat of an A/B test. They’re customer-facing and directly affect page speed and user experience, so you need to test them for quality constantly. Before you begin, prepare a list of all the elements to QA. This list should be a core part of your QA strategy. It helps you run successful audits, reduce turnaround time, and identify and fix glaring site weaknesses and issues.

Some critical areas you should focus on:

- Page speed and responsiveness: These are easily the most essential and the most overlooked aspects of QA. Many dynamic site factors influence page performance and responsiveness. You may evaluate these factors for individual functionalities, but checking whether and how they slow down your site as a whole can make all the difference. You need to see the bigger picture and explore every aspect of responsive design, keeping both site performance and the customer experience in mind.

- Device type: Screen resolutions differ based on the device a page is accessed from. This is extremely important when testing for a mobile audience where resolutions heavily impact user behavior. To enable a quality experience, you need to identify what device your consumers use. Track patterns and QA your test elements (for those devices) accordingly.

- Graphics and visuals: All graphic and visual page elements should undergo QA, since they affect page performance and load time. Ideally, you should understand how to use images in your A/B tests. Any image you use should fit your site structure and design and load properly on different devices.

- Basically everything: Are the on-page forms working? Did any change to them affect site quality? How does the old version compare with the new one? We could list hundreds of elements to QA on a site and still miss the point. This is why you should know every detail that needs validation and testing. How do you do that? By continually checking and verifying the results your changes bring.

Integrations with Third-Party Tools

Suppose you’ve set up a Google Analytics integration (or one with another third-party tool, such as Mixpanel or Kissmetrics) to send variation-specific data between platforms. In that case, make sure the data flows smoothly and accurately.
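One way to check that integration data flows accurately is to compare per-variation visitor counts from both tools for the same date range. This Python sketch assumes you’ve exported those counts by hand into two dictionaries. The variation names, the numbers, and the 5% tolerance are hypothetical. Some gap between tools is normal, because they count visitors differently. A large gap, or a variation missing from one side, is worth investigating before launch.

```python
def reconcile_counts(testing_tool: dict, analytics: dict,
                     tolerance: float = 0.05) -> list:
    """Compare per-variation visitor counts from the A/B testing tool with those
    the analytics tool received, and describe every mismatch found."""
    problems = []
    for variation in sorted(set(testing_tool) | set(analytics)):
        if variation not in analytics:
            problems.append(f"{variation}: no data in analytics (is the integration sending it?)")
            continue
        if variation not in testing_tool:
            problems.append(f"{variation}: only in analytics (unexpected variation label?)")
            continue
        expected, received = testing_tool[variation], analytics[variation]
        if expected == 0:
            if received:
                problems.append(f"{variation}: testing tool reports 0 visitors, analytics {received}")
            continue
        gap = abs(received - expected) / expected
        if gap > tolerance:
            problems.append(f"{variation}: {gap:.0%} gap ({expected} vs {received})")
    return problems


# Hypothetical visitor counts for the same period.
tool_counts = {"Original": 1000, "Variation 1": 980}
analytics_counts = {"Original": 990, "Variation 1": 610}
print(reconcile_counts(tool_counts, analytics_counts))
# ['Variation 1: 38% gap (980 vs 610)']
```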

Flashing or Flickering

Remove any flickers before making the test live.

If you use an asynchronous code snippet on your site, visitors might see the original before the variation loads. To give your visitors a smooth site experience, switch to a synchronous snippet. The original is then hidden during the short loading time, and your visitor sees only the variation.

Cross-Browser and Cross-Device

One of the most common issues with A/B testing is browser and device compatibility. Examine how the variation appears in the most popular browsers (Chrome, Safari, Firefox, and Edge) and on each device type (desktop, tablet, and mobile).

Just because your site is responsive doesn’t mean it’ll display and work correctly on all devices. You still need to QA keeping mobile-specific issues in mind. Remember that a good “desktop experience” is not the same as a good “mobile experience”.
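You can keep cross-browser and cross-device QA systematic by enumerating every combination you intend to check. This includes both orientations on tablets and phones. This Python sketch is illustrative only. Its browser and device lists mirror the ones named above. In practice, you’d trim or extend them to match the browsers and devices your analytics show your visitors actually use.

```python
from itertools import product

BROWSERS = ["Chrome", "Safari", "Firefox", "Edge"]
# Orientations worth checking per device type; desktops are checked in one layout.
ORIENTATIONS = {
    "desktop": ["landscape"],
    "tablet": ["portrait", "landscape"],
    "mobile": ["portrait", "landscape"],
}


def build_qa_matrix(variations: list) -> list:
    """Return one (variation, browser, device, orientation) tuple per QA check."""
    return [
        (variation, browser, device, orientation)
        for variation, browser, device in product(variations, BROWSERS, ORIENTATIONS)
        for orientation in ORIENTATIONS[device]
    ]


checks = build_qa_matrix(["Original", "Variation 1"])
print(len(checks))  # 2 variations x 4 browsers x 5 device/orientation combos = 40
```

Each tuple can become a row in your QA tracker. When a variation is fixed, you can re-check every affected combination instead of a few remembered ones.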


Talia Wolf, Conversion Optimization Consultant at GetUplift, summarizes the cross-device problems pretty well and offers some possible solutions:

Talia’s take on device usability sheds light on more than just the usual aspects of the device experience, essentially focusing on areas that enable easy navigation and simple handling. You need to go above and beyond and consider existing and future device capabilities to deliver the right quality.

3. Focus on Page Experience

What’s the point of all your effort if it doesn’t ultimately improve the page experience? A page’s functionality significantly affects the page experience and should be QA’d promptly.

- User interaction: Start by analyzing how a user interacts with a page. List the different interaction touchpoints and categorize them based on their technicality. Make sure you check the smallest detail. Is everything clickable, and does every link redirect where it should? What does the navigation hover look like? Are there any page glitches or bounce triggers?

- Page load time: Check your page load time before you run an A/B test and right after you start it. Any difference is usually negligible, but recheck the variation changes if you see a significant increase. The increase can come from variation changes that take a long time to load or from the speed of the tracking code snippet.

Keep in mind that load times are longer than usual when you perform QA with a testing tool, so they don’t accurately represent actual page load time.

Tip: Convert’s tracking script adds about 450ms to the first page load on websites where it’s installed. Subsequent loads are cached at either the browser level or the CDN edge level.

Additionally, previewing each variation of the test helps confirm that it loads properly and produces the expected results.
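A “significant increase” in load time can be made concrete. Collect a few load-time samples for the original and the variation under the same conditions (same device, network, and cache state), then compare their medians. Medians are less sensitive than averages to a single slow run. The samples and the 10% threshold below are hypothetical. As noted above, timings taken in a testing tool’s QA mode can overstate real load times.

```python
from statistics import median


def load_time_regression(original_ms: list, variation_ms: list,
                         max_increase: float = 0.10) -> tuple:
    """Return (relative increase of the variation's median load time, flagged?)."""
    baseline = median(original_ms)
    increase = (median(variation_ms) - baseline) / baseline
    return increase, increase > max_increase


# Hypothetical page load timings in milliseconds.
original = [1200, 1250, 1180, 1300, 1220]
variation = [1500, 1480, 1550, 1620, 1490]
increase, flagged = load_time_regression(original, variation)
print(f"{increase:.1%} slower, recheck variation: {flagged}")
# 23.0% slower, recheck variation: True
```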

4. Align QA with Your Conversion Goals

A/B testing is the precursor of conversions. If your tests don’t eventually align with your conversion and A/B test goals, you’re in for the long haul and run the risk of exhausting existing resources.

The best design isn’t the one that just makes your company look cool and edgy and sophisticated. It’s the design that supports conversion, has room for cool copy, and power calls-to-action that make people click the big orange button.

Brian Massey, Conversion Scientist at Conversion Sciences

If Brian’s words are anything to go by, all the elements previously discussed shouldn’t only move your visitors down the conversion funnel but lead to conversions. You can only test and try and test again for as long as you have the time, people, and budget. This calls for optimizing all these areas in a way that impacts your bottom line and keeps your business running.

Here’s a use case:

If you run your CSS selectors in the browser console, you can see whether the elements they return are the ones you’re looking for. If anything deviates, check whether your regular expressions are too broad. To be sure, take a handful of website URLs and run them against your expressions with a tool like RegEx Pal. This applies both to targeting your tests and to tracking conversions. You can also check whether your test is running on pages that weren’t designed for it.
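Here’s how that URL check might look in Python. Keep two lists: URLs the experiment should target and URLs it shouldn’t. Then confirm that your expression handles both correctly. An overly broad pattern shows up immediately as unwanted matches. The product-page pattern and URLs are hypothetical. Python’s regex syntax differs from JavaScript’s (which RegEx Pal uses) in some details, though not for a pattern this simple. How your testing tool anchors Site Area patterns is not modeled here.

```python
import re

# Hypothetical Site Area: individual product pages only.
PRODUCT_PAGES = re.compile(r"^https://www\.example\.com/products/[^/?#]+/?(\?.*)?$")
TOO_BROAD = re.compile(r"example\.com/products")

SHOULD_MATCH = [
    "https://www.example.com/products/blue-mug",
    "https://www.example.com/products/blue-mug/?utm_medium=qa",
    "https://www.example.com/products/blue-mug?color=red",
]
SHOULD_NOT_MATCH = [
    "https://www.example.com/products/",                  # listing page, no product
    "https://www.example.com/products/blue-mug/reviews",  # sub-page of a product
    "https://www.example.com/products-archive/blue-mug",  # similarly named section
    "https://staging.example.com/products/blue-mug",      # staging environment
]


def check_pattern(pattern, should_match: list, should_not_match: list) -> tuple:
    """Return (URLs the pattern misses, URLs it matches but shouldn't)."""
    missed = [url for url in should_match if not pattern.search(url)]
    too_many = [url for url in should_not_match if pattern.search(url)]
    return missed, too_many


print(check_pattern(PRODUCT_PAGES, SHOULD_MATCH, SHOULD_NOT_MATCH))  # ([], [])
print(len(check_pattern(TOO_BROAD, SHOULD_MATCH, SHOULD_NOT_MATCH)[1]))  # 4
```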

This all might seem like a lot, but the process should be similar to the extensive testing you’d do when releasing a new website/app, which can take days and a lot of effort.

Why QA Isn’t Done Properly

The following factors contribute to your A/B tests not being adequately QA’d.

Time Constraints

Development teams are under constant pressure to maintain a high split testing pace to achieve faster growth. However, A/B tests are more likely to be implemented incorrectly under such a load.

Wrongly Implemented Tracking Codes

This is a simple mistake to make, but it can have huge consequences. If you implement tracking codes incorrectly or forget them altogether, the data from an A/B test becomes useless. There’s no way to know which variant was better at converting visitors into leads and customers.

No Clear Hypotheses

An A/B test pits a new hypothesis against your original page, nothing more, nothing less. That hypothesis needs to be clearly delineated and defined.

For example, you might want to see whether including a quote from a CEO increases or decreases a landing page’s conversion rate. The null hypothesis states that the quote has no meaningful impact on the conversion rate. You can then formulate a directional hypothesis: including the quote results in a higher conversion rate.
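Once the experiment has run, the CEO-quote hypotheses map onto a one-sided two-proportion z-test. The null hypothesis says the quote doesn’t raise the conversion rate, and the directional hypothesis says it does. This Python sketch uses made-up numbers (4.0% vs. 5.0% on 5,000 visitors each). It applies the standard pooled z-test, which may differ from the statistics your testing tool reports.

```python
import math
from statistics import NormalDist


def one_sided_z_test(conv_original: int, n_original: int,
                     conv_variation: int, n_variation: int) -> tuple:
    """H0: the variation converts no better than the original.
    H1 (directional): the variation converts better.
    Returns (z statistic, one-sided p-value) from a pooled two-proportion z-test."""
    p_orig = conv_original / n_original
    p_var = conv_variation / n_variation
    p_pool = (conv_original + conv_variation) / (n_original + n_variation)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_original + 1 / n_variation))
    z = (p_var - p_orig) / se
    return z, 1 - NormalDist().cdf(z)


# Hypothetical results: original 200/5000 (4.0%), page with CEO quote 250/5000 (5.0%).
z, p_value = one_sided_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, one-sided p = {p_value:.4f}")
```

With these numbers, z is about 2.4 and the one-sided p-value is below 0.01, so you’d reject the null hypothesis in favor of the directional one. That conclusion only holds if the test was QA’d and its goals tracked correctly. A misfiring goal makes the arithmetic meaningless.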

Is your hypothesis properly formulated? Get big conversion lifts with Convert’s hypothesis generator.

Inadequate Sample Size

Small online stores and websites often don’t get enough traffic for A/B tests to be useful or appropriate. With a small sample, the results are prone to severe volatility and distortion.

The smaller the sample, the greater the likelihood of inaccurate estimates.

Excessively large samples can also be a problem. With very large samples, even minor differences in means become statistically significant when you use interval-scaled data, such as length of stay. Calculating the effect size and the ideal sample size up front helps you avoid this problem.

Tip: Use our online A/B testing significance calculator to plan your tests.
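As a rough planning aid, the standard normal-approximation formula for comparing two conversion rates gives the number of visitors needed in each variation. The result depends on the significance level and statistical power you choose. This Python sketch uses a textbook formula, not necessarily the method behind Convert’s calculator. The 5% baseline and 6% target conversion rates are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline: float, expected: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variation to detect a change from `baseline` to
    `expected` conversion rate with a two-sided two-proportion z-test."""
    if not (0 < baseline < 1 and 0 < expected < 1) or baseline == expected:
        raise ValueError("rates must be distinct and strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (expected - baseline) ** 2)


print(sample_size_per_variation(0.05, 0.06))
```

Detecting a lift from 5% to 6% needs roughly 8,150 visitors per variation. Halving the detectable lift roughly quadruples that number, which is why small sites struggle to reach reliable results.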

How Convert Overcomes Typical QA Challenges

Any company introducing experimentation into their digital marketing strategy should incorporate QA measures into their operational workflows, such as certifying integrations and previewing experiences before going live.

At Convert, we integrate the many pieces of a puzzle to create a personalized strategy in the simplest possible way. We’ve helped hundreds of businesses improve their ability to build, test, review, and launch experiences with our diverse tools and resources.

Let us show you what they are and how they can be a well-rounded solution to all your QA problems.

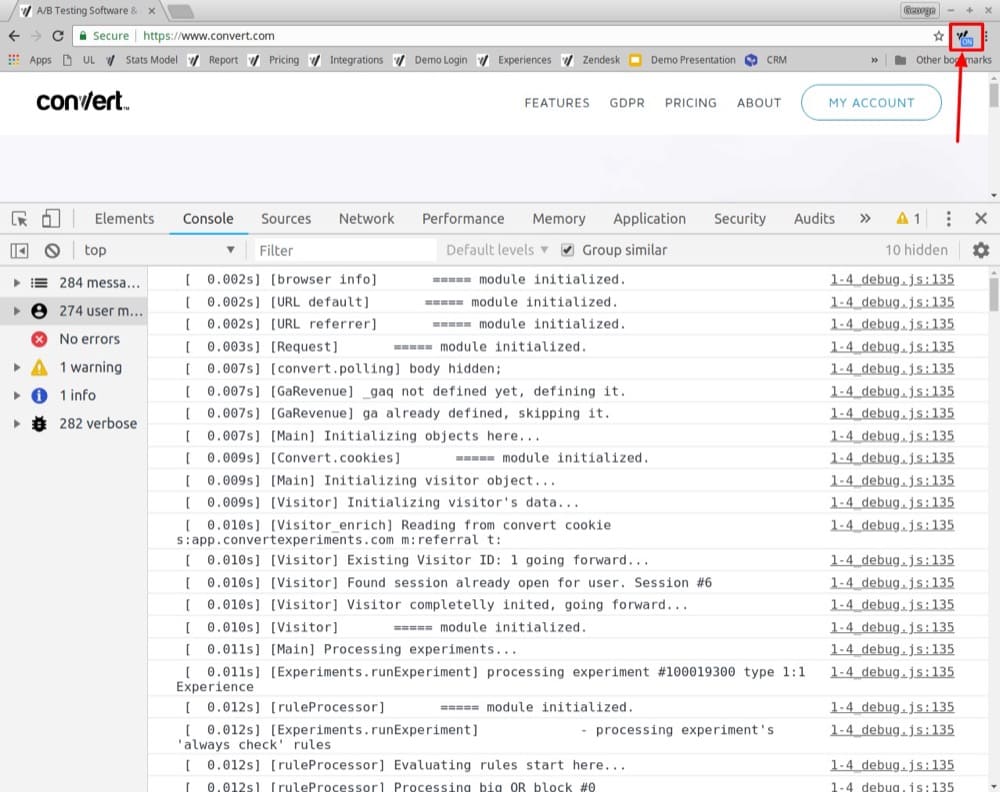

The Google Chrome Convert Debugger

Our Google Chrome extension outputs “Convert script logs” to the Chrome Developer Tools console, including the experiments and variations that were triggered. This tool helps you determine the sequence of testing events and provides valuable information when you debug a test.

Remember: Use this debugger throughout the QA process to check whether you’re bucketed into the experiment and to see which variation you’re shown.

Learn more about installing Chrome Debugger Extension For Convert Experiences.

It’s common to get bucketed into the original variation and mistakenly assume that the experiment isn’t working. To avoid this, we recommend enabling the extension and performing the QA in incognito sessions. The debugger output refers to all variations and experiments by their IDs.

Find out how to determine experiment and variation IDs.

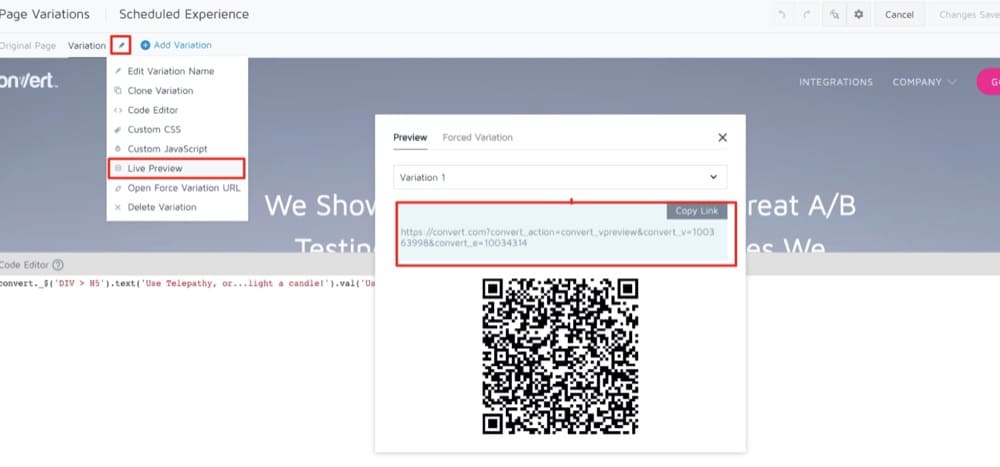

Preview Variation URLs

These URLs support developing or drafting an experience with the Visual Editor. While working, the experiment QA tester should switch back and forth between these URLs and the Visual Editor view. The Visual Editor view may not be entirely accurate, because the editing window has only one frame. Also, the code that makes changes in the editor runs repeatedly for a better user experience.

Keep a few things in mind while previewing variation URLs:

- Site Area and Audience conditions aren’t considered when previewing with the “Live Preview URL.”

- The changes are applied to the page URL with preview parameters appended, which makes it easy to test different views.

Previewing a campaign and conducting the QA process are quite different.

Previewing a campaign doesn’t replace a real QA process, as the “Preview mode” forces changes to be displayed to generate a preview and doesn’t allow you to check click tracking or targeting.

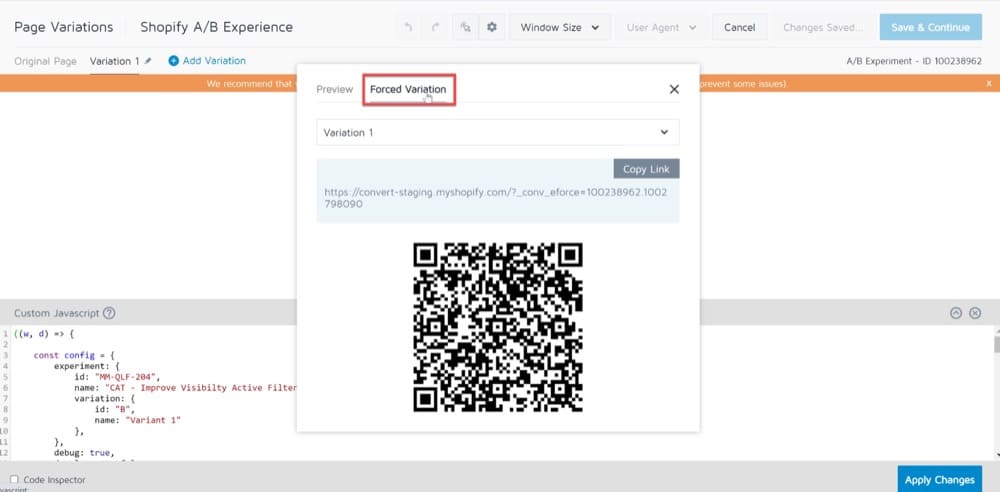

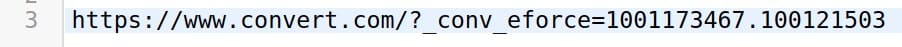

Force Variation URLs

Force variation URLs give you an unambiguous view of the test. With them, you test your experiment in an environment similar to a visitor’s.

Remember: You should use these URLs for the final testing of your experiment.

Variations viewed through this URL are served from the same CDN server that serves the experiments.

When you use a Force Variation URL, the test conditions are evaluated, so make sure your visit meets them. Also remember that goal configurations often include URL conditions. This could be why your goals don’t fire with a Force Variation URL.
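A common cause is a goal with an exact-match URL condition, while the URL you actually land on carries extra query parameters. The Python sketch below shows the mismatch and one way around it: comparing URLs with the query string removed. The force_variation parameter name and the URLs are hypothetical and do not reproduce Convert’s actual Force Variation URL format. Your tool’s own matching options determine what really happens.

```python
from urllib.parse import urlsplit, urlunsplit


def without_query(url: str) -> str:
    """Drop the query string and fragment, keeping scheme, host, and path."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def url_condition_matches(visited: str, condition_url: str,
                          ignore_query: bool = False) -> bool:
    """Mimic an exact-match URL condition, optionally ignoring query parameters."""
    if ignore_query:
        return without_query(visited) == without_query(condition_url)
    return visited == condition_url


GOAL_URL = "https://www.example.com/pricing"
# Hypothetical forced-variation visit: same page, extra query parameter.
FORCED_VISIT = "https://www.example.com/pricing?force_variation=1001.2002"

print(url_condition_matches(FORCED_VISIT, GOAL_URL))                     # False
print(url_condition_matches(FORCED_VISIT, GOAL_URL, ignore_query=True))  # True
```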

Struggling to format variation URLs? Here are some useful tips.

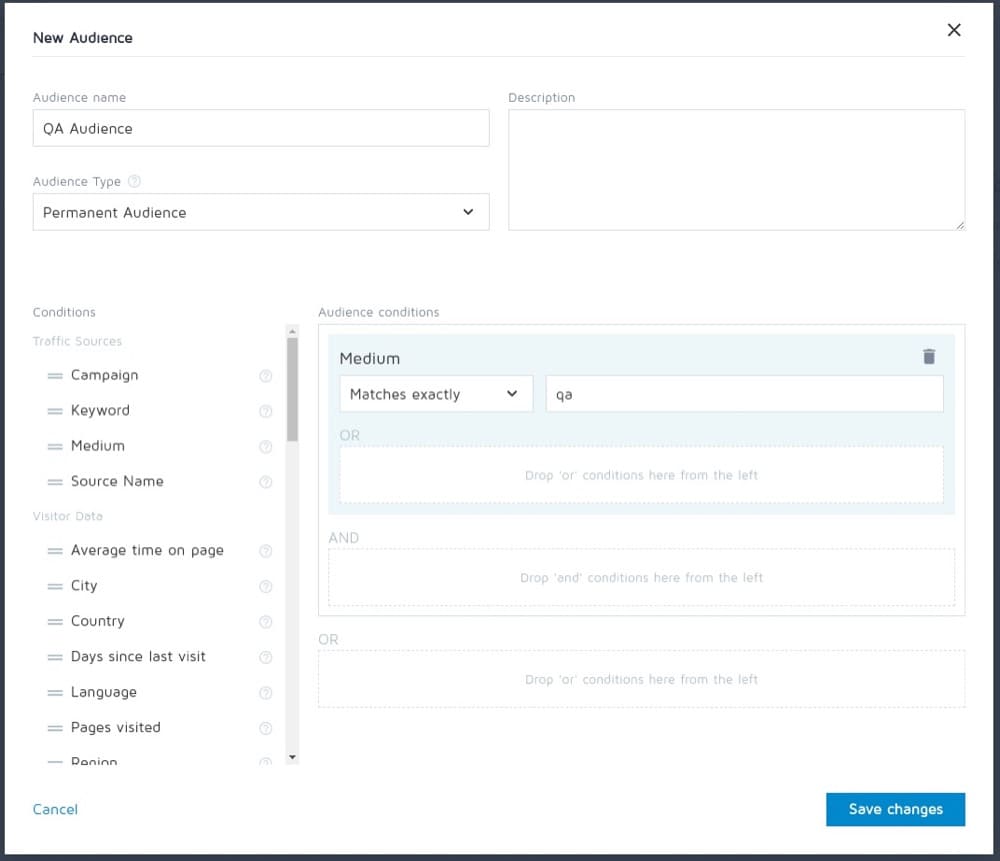

Using Query Parameters in QA Audiences

You can limit the visitors who see your experiment by adding a QA audience to it.

If you add a QA query parameter to the experiment URL, you can use it to define this audience.

https://www.domain.com/mypage.html?utm_medium=qa

To create a “New Audience,” you can use a condition like:

Medium: Matches exactly qa

A QA audience lets you “Activate” and verify a test without bucketing real visitors into it.

- First, assign the QA audience (target group) to your experiment.

- Open a new incognito window in your browser to test.

- Don’t open a second tab, and make sure no other incognito windows are open.

- Close the window when you’re done with the current test, and open a new window for each new test.

Before visiting the site, add the following to the end of your URL:

?utm_medium=qa (replace qa with the value you chose when you created the Audience).

In most cases, you’ll visit the URL that matches the “Site Area” conditions. Depending on your experiment’s structure, you may instead first visit a different page and then navigate to the Site Area that triggers the experiment. In either case, make sure to add the query parameter to the very first URL you visit. The parameter is saved even if you navigate to other pages.

For example, if the URL is “http://www.mysite.com,” you should visit:

http://www.mysite.com?utm_medium=qa

If you follow the instructions above, you can eventually see every variation of the experiment, because the variation is chosen randomly. If you didn’t start on the URL that matches the Site Area conditions, navigate to the one that does. You should then see either the “Original” or one of the variations, depending on what the experiment randomly selects.

Remember: adding a QA audience may affect your ability to match Site Area and destination URL conditions.
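If you QA many pages, a small helper can append the QA parameter correctly whether or not the URL already has a query string or a fragment. This avoids malformed links such as page?a=1?utm_medium=qa. The Python sketch below also mimics the “Medium: Matches exactly qa” condition for the first URL you visit. Both functions are illustrations built on assumptions. They don’t model the tool’s real matching rules (for example, case sensitivity) or how it remembers the parameter across pages.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_qa_param(url: str, value: str = "qa", key: str = "utm_medium") -> str:
    """Append key=value to the URL's query string, replacing any existing value
    for key and keeping other parameters and the #fragment intact."""
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != key]
    query.append((key, value))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), parts.fragment))


def in_qa_audience(url: str, value: str = "qa", key: str = "utm_medium") -> bool:
    """Rough stand-in for a 'Medium: Matches exactly qa' condition (case-sensitive here)."""
    params = dict(parse_qsl(urlsplit(url).query, keep_blank_values=True))
    return params.get(key) == value


print(add_qa_param("http://www.mysite.com"))
# http://www.mysite.com?utm_medium=qa
print(add_qa_param("https://www.domain.com/mypage.html?color=red#top"))
# https://www.domain.com/mypage.html?color=red&utm_medium=qa#top
```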

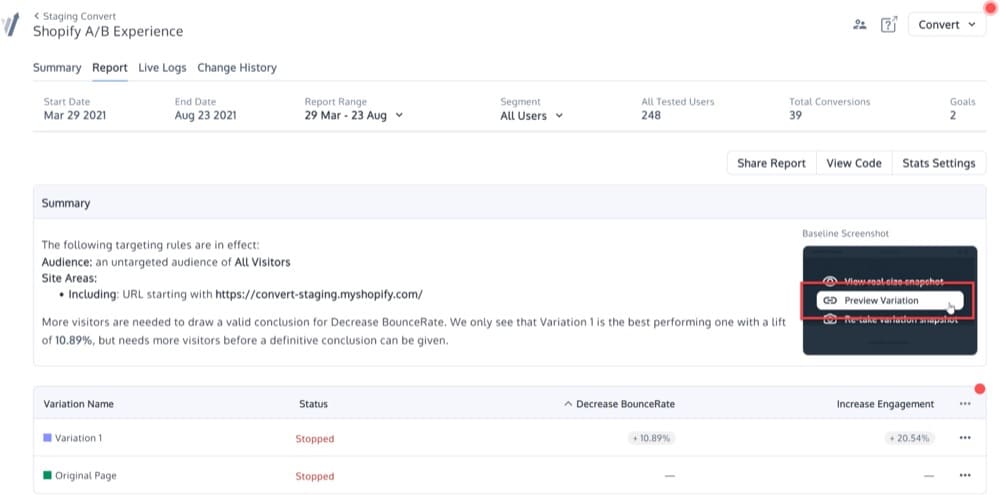

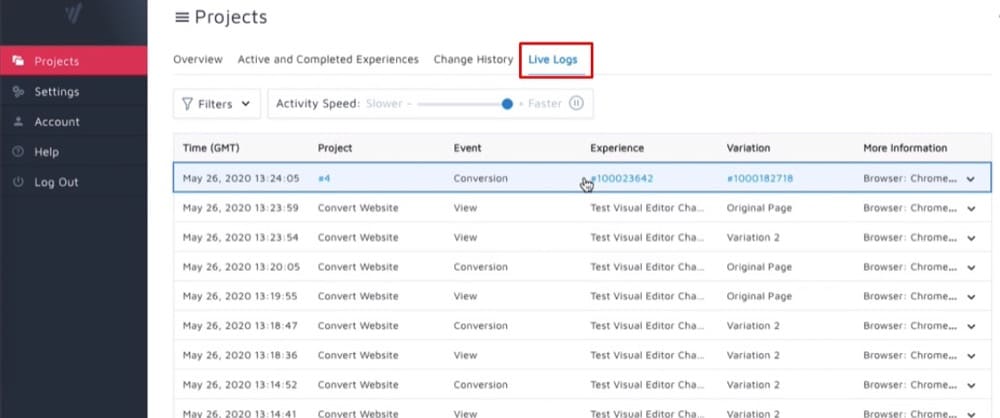

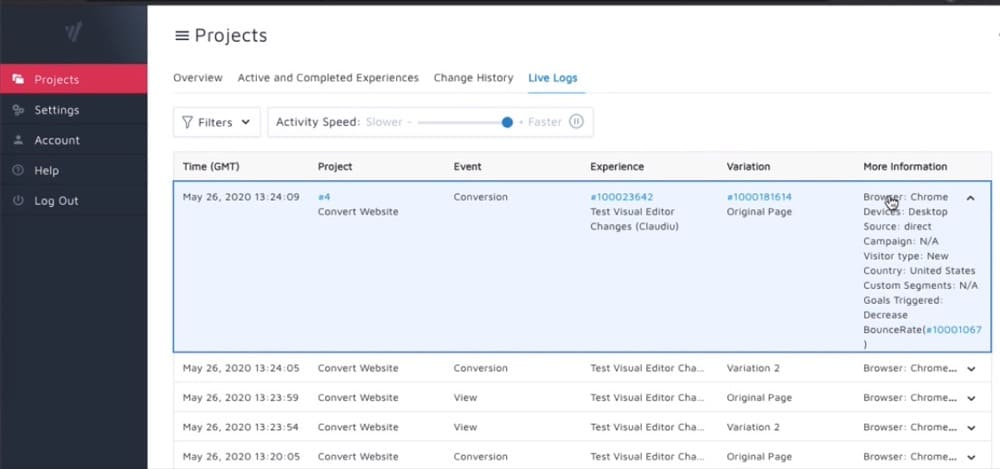

Live Logs

Live Logs in Convert Experiences track how end-users interact with web pages and experiments at the project and experiment level in real time. They capture information like the timestamp when a target fires, the type of event fired, the variation presented to the end-user, and more.

To view live logs for a specific project, you can navigate to Projects > Live Logs on the top panel.

Here, you can click anywhere in the row to see more details of the activity, such as the experiment name, browser type, device used to access the experiment, the user’s country, goals triggered during a visit, and so on.

With Live Logs, you can track and monitor how conversions occur. They also make it easy for you to validate your setup and debug any issues found during test setup or conversions. Live Logs also act as a source to help you track revenue and validate the experiment and the goals set up.
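You read Live Logs in the Convert interface, but the validation idea behind them is easy to express in code. For each variation you QA’d, every goal you expect should have fired at least once. This Python sketch assumes you’ve recorded the events you saw during QA as simple records. The field names, event types, and goal names are hypothetical and don’t reflect any Convert export format. The goal name Click_Cart_Checkout_1 is borrowed from the support case quoted earlier.

```python
from collections import defaultdict


def goals_not_fired(events: list, variations: list, expected_goals: list) -> dict:
    """Map each variation to the expected goals that never fired for it."""
    fired = defaultdict(set)
    for event in events:
        if event.get("type") == "conversion":
            fired[event["variation"]].add(event["goal"])
    report = {}
    for variation in variations:
        missing = sorted(set(expected_goals) - fired[variation])
        if missing:
            report[variation] = missing
    return report


# Hypothetical events noted during one QA session per variation.
qa_events = [
    {"type": "bucketing", "variation": "Original"},
    {"type": "conversion", "variation": "Original", "goal": "Click_Cart_Checkout_1"},
    {"type": "conversion", "variation": "Original", "goal": "Purchase"},
    {"type": "bucketing", "variation": "Variation 1"},
    {"type": "conversion", "variation": "Variation 1", "goal": "Purchase"},
]
print(goals_not_fired(qa_events, ["Original", "Variation 1"],
                      ["Click_Cart_Checkout_1", "Purchase"]))
# {'Variation 1': ['Click_Cart_Checkout_1']}
```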

Look Ahead, Always!

QA-ing your A/B tests is essential to ensure your website is fully functional and error-free. QA not only helps solve current problems but also lays the groundwork for preventing future ones.

However, putting together a QA process might be difficult. With so many potential users on many devices with different goals and ambitions, it’s difficult to know where to start and what questions to ask.

We polled the conversion rate optimization community about the importance of QA.

The response was unequivocal.

The QA procedure is important to the success of an experiment, according to 100% of respondents.

We’ve combined industry-wide QA best practices with insights from Convert’s Certified Agency Partners to build a comprehensive checklist you’ll use again and again.

The checklist above isn’t intended to be a complete list of everything you should check. Rather, it’s a starting point for thinking about what needs to be done before you launch your A/B tests. Remember that QA is a journey, not a destination. It should be done regularly and whenever you make major changes.