The 2018 SMX Advanced has come and gone, and we want to share some of our main takeaways. Much of the focus at the conference was on subjects that have been on the community’s radar for a while, such as machine learning and AI, the mobile-first index, voice agents, and voice search, but as is often the case, there was value in weaving those disparate SEO threads together. Below is a breakdown of the key SEO and digital marketing messages we took away.

Google voice search ranking factors are quantified and public

Google does not yet provide direct data about voice search queries, but the on- and off-page elements it takes into account when deciding which results to return for a voice query are known. Other sources can also reveal what the target audience is asking about: long-tail keyword research, social listening, internal site search, user research, CS feedback, and chatbot logs. One of the biggest revelations at SMX Advanced, however, was that the mechanics Google uses to decide what to return for a voice search are not just known; they’re public. In her presentation as part of the panel “Optimizing Content for Voice Search and Virtual Assistants,” Upasna Gautam of Ziff Davis recapped a case study published by Google titled “Google Search by Voice: A case study.” In this study, Google walks through the parameters it uses to evaluate and present search results via voice.

What are Google’s voice search ranking factors?

Below is a summary of the five main categories of Google’s voice search ranking factors, as described in the Google Search by Voice case study:

- Word Error Rate (WER): Google counts recognition errors at the word level (words substituted, inserted, or deleted relative to what the user actually said) against the recognizer. As an equation: WER = (# of word substitutions + insertions + deletions) / total # of words spoken

- Semantic Quality or “WebScore”: This is the overwhelming ranking factor for appearing as a voice search result. WebScore measures how often the search results returned for the recognition hypothesis match the results returned for a human transcription of the same query, so a minor recognition error that doesn’t change the results (e.g., dropping “in” from “dog parks [in] sf”) still counts as correct. Basically: WebScore = # of spoken queries with correct search results / total # of spoken queries

- Perplexity (PPL): The size of the set of words that could plausibly be recognized next in a sequence, given the previously recognized words in the query. Lower PPL = a better chance of the model predicting the next word in the query

- Out-of-Vocabulary Rate (OOV): The percentage of words spoken by the user that are not modeled by the language model. Basically, if your target audience uses words that aren’t in the vocabulary of your content, it will result in a recognition error, which may also cause errors in surrounding words as the predictive model breaks down in a cascade effect

- Latency: The total time, in seconds, it takes to complete a voice search, measured from the end of the user’s sentence to the moment the search results appear on screen. (Yet another reason why site speed is supreme.)
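The first four metrics are easier to reason about when written out. The following is a minimal Python sketch, not Google’s implementation: it assumes whitespace-tokenized transcripts, treats WebScore as a plain match ratio over queries whose result comparison has already been made, and takes per-word language-model probabilities as given. All function names are hypothetical. Latency is left out because it is simply elapsed time.

```python
import math
from typing import Sequence


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + insertions + deletions) / words in reference.

    Computed as the word-level edit (Levenshtein) distance between the
    human transcript (reference) and the recognizer output (hypothesis).
    Assumes a non-empty reference.
    """
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = fewest edits turning ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,        # deletion
                dist[i][j - 1] + 1,        # insertion
                dist[i - 1][j - 1] + sub,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / len(ref)


def web_score(results_match: Sequence[bool]) -> float:
    """Share of spoken queries whose recognized results match the results
    for the human transcript (True = same results)."""
    return sum(results_match) / len(results_match)


def perplexity(word_probs: Sequence[float]) -> float:
    """PPL = exp(-mean log p(word | previous words)); lower is better."""
    return math.exp(-sum(math.log(p) for p in word_probs) / len(word_probs))


def oov_rate(spoken_words: Sequence[str], vocabulary: set[str]) -> float:
    """Fraction of spoken words missing from the model's vocabulary."""
    return sum(w not in vocabulary for w in spoken_words) / len(spoken_words)
```

For instance, recognizing “dog parks in sf” as “dog parks sf” is one deletion out of four words, a WER of 0.25, even though both queries may return the same results and so still count as correct toward WebScore.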

The full case study is publicly available from Google and is worth reading in its entirety.

Machine Learning and SEO

The connections between machine learning and SEO are now undeniable. On the search engine side, technologies like RankBrain are integrating machine learning into the search algorithm, with the ultimate goal of having the search engine understand websites the way an actual human end user would read and navigate them. On the SEO technology side, machine learning is being integrated into the way SEOs discover opportunities, optimize content, and more. In her presentation as part of the panel on voice search, Benu Aggarwal, founder and president of digital marketing agency Milestone, expounded on the concept of AI-first digital marketing. In this model, according to Aggarwal, AI-driven technology like customer service bots, marketing bots, and product bots could be harnessed not just to direct human users to the content or services they need, but to gather usage data and user queries to better guide the development of the product or website. This was also a theme that BrightEdge covered in its Tuesday session, Accelerating Enterprise SEO Performance: The Dynamic SERPs and Artificial Intelligence.

The truth behind the mobile-first index

As we all know, 2018 is the year of the mobile-first index. That said, there's still a degree of uncertainty in the community over just what that entails. Various speakers provided some much-needed level setting about mobile-first. The best walkthrough was by Alexis Sanders, technical SEO manager at Merkle. In her presentation, Google is Adapting to the Worldwide Shift to Mobile, Sanders summarized the mobile-first index as follows:

- The mobile-first index is about prioritizing mobile

- It's not a separate index or database

As such, this change largely affects only websites that still present different experiences on mobile and desktop, whether through an "m." subdomain or a subdirectory. Sites that already use responsive design should be good to go, though mobile site speed is still a major concern, especially since, as Sanders pointed out, the mobile web continues to be a slower experience overall.

Schema tagging is here to stay

The consensus from many speakers was that schema tagging plays a major role in optimizing for voice. Benu Aggarwal was among the most bullish on schema, saying that her content ideation for clients begins with identifying all the industry-relevant schema tags and mapping content topics to those tags.
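As an illustration of mapping a content topic to a schema tag, the sketch below emits schema.org FAQPage markup as JSON-LD from a set of question-and-answer pairs. It is a hypothetical example, not Aggarwal’s process: the TOPIC_TO_SCHEMA_TYPE mapping and the faq_jsonld helper are assumptions made for illustration, while FAQPage, Question, Answer, mainEntity, and acceptedAnswer are standard schema.org vocabulary.

```python
import json

# Hypothetical topic-to-type mapping; a real one would come from reviewing
# the schema.org types that are relevant to the client's industry.
TOPIC_TO_SCHEMA_TYPE = {
    "faq": "FAQPage",
    "recipe": "Recipe",
    "event": "Event",
    "store": "LocalBusiness",
}


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": TOPIC_TO_SCHEMA_TYPE["faq"],
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    print(faq_jsonld([("Are dogs allowed in the park?", "Yes, on a leash.")]))
```

The resulting JSON would typically be placed in a `<script type="application/ld+json">` element on the page it describes.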

Marketers should be working on their own voice agents

This was the focus of a presentation by Eric Enge, founder and CEO of Stone Temple, and Duane Forrester, VP of Industry Insights at Yext. A voice agent is a customized voice that Google, Alexa, and similar assistants hand the user off to once they enter your brand’s ecosystem to perform certain actions or transactions. Developing an effective voice agent is quite complex: it involves not just building customer personas but extrapolating from those target customers to develop a brand persona that can embody the brand promise. Stone Temple is working on one and is using it to engage incremental traffic the way most brands do with live chat widgets. Enge mentioned that they have even built a digital marketing quiz that can be accessed through Alexa with the query "open the Stone Temple quiz."

SEO is at the heart of customer experience

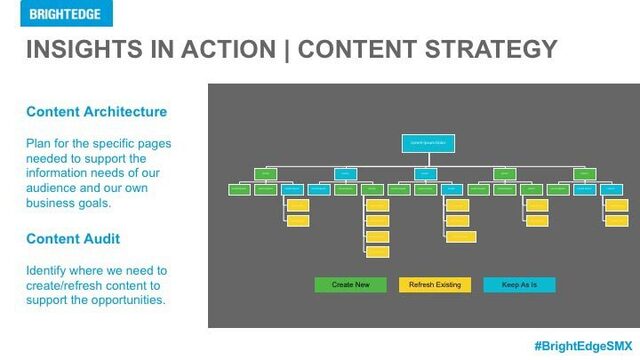

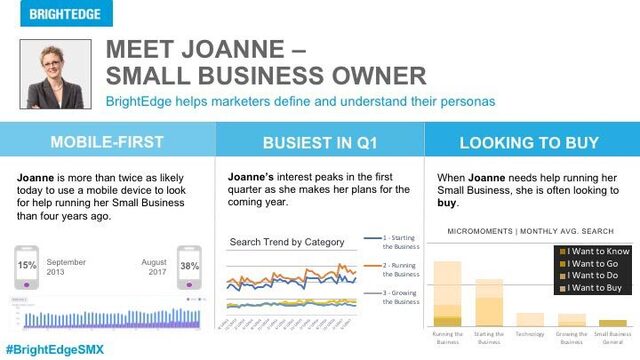

It sometimes feels like SEO spent years as an outsider to the larger marketing group, like an electrician called in to wire a new building only after every other trade has finished its work. Increasingly, however, SEO practitioners are being brought into the marketing conversation. As part of that shift, data collected from organic search is being used not just to measure the success of the channel but as a directional metric to guide other marketing efforts. This was the message delivered by our own VP of Strategic Innovation Ken Shults in our Tuesday session. Together with BrightEdge Director of Product Management Michael DeHaven, Ken talked about how insights from organic search can be used to guide further development of the website. In his example, Ken walked through how to analyze the organic search queries entered by members of a specific marketing persona and use them as a kind of backend data source to guide the refinement of site navigation, content taxonomy, and content development itself, echoing the message given earlier in the day in the voice search panel.

If you attended SMX Advanced and are hungry for more SEO and digital marketing insights, be sure to check out the Share Global Insights Tour in San Francisco on October 10.
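The session’s exact tooling isn’t described in this recap, so the following is only a hypothetical Python sketch of the persona-based query analysis Ken described: it assumes an organic search export in which each query already carries a persona label and a click count, and it tallies the most-clicked terms per persona as raw material for navigation and taxonomy decisions. The stopword list, the function name, and the sample rows are illustrative assumptions.

```python
from collections import Counter, defaultdict

# Hypothetical stopword list; a real analysis would use a fuller one.
STOPWORDS = {"a", "an", "the", "for", "in", "of", "to", "how", "what", "is"}


def top_terms_by_persona(rows, n=3):
    """Return the n highest-click terms for each persona.

    rows: iterable of (persona, query, clicks) tuples, assumed to come
    from an organic search export already segmented by persona.
    """
    terms = defaultdict(Counter)
    for persona, query, clicks in rows:
        for word in query.lower().split():
            if word not in STOPWORDS:
                # Weight each term by the clicks its query earned.
                terms[persona][word] += clicks
    return {
        persona: [term for term, _ in counts.most_common(n)]
        for persona, counts in terms.items()
    }


if __name__ == "__main__":
    sample = [  # made-up rows for illustration only
        ("developer", "api rate limits", 120),
        ("developer", "how to api authentication", 80),
        ("marketer", "seo reporting dashboard", 60),
        ("marketer", "seo keyword tracking", 40),
    ]
    print(top_terms_by_persona(sample))
```

Terms that dominate one persona’s queries but are missing from the site’s navigation or taxonomy would be natural candidates for the kind of refinement described above.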